ECE Research Group & Lab Spotlight: OLIVES

Dec 09, 2022 — Atlanta, GA

ECE Professor Ghassan AlRegib’s OLIVES research group in the Georgia Tech School of Electrical and Computer Engineering is at the forefront of today’s computer vision and visual machine learning research.

The human brain is made up of around 100 billion neurons, which work together to process complex information in parallel at remarkable speed. Through the sense of sight alone, humans can gather a treasure trove of information in the blink of an eye and apply that information to make split-second decisions. With ‘smart’ technology becoming more and more ingrained in everyday life and increasingly trusted to keep humans safe, it is crucial for machines to have human-like vision and rapid information processing capabilities.

Professor Ghassan AlRegib’s OLIVES (Omni Lab for Intelligent Visual Engineering and Science) research group in the Georgia Tech School of Electrical and Computer Engineering (ECE) is at the forefront of today’s computer vision and visual machine learning research and its deployment in everyday life applications.

“We’re providing machines with the robust algorithms and datasets they need to better see, learn from, and respond to the world around them,” said AlRegib.

Working in the areas of autonomous driving, machine learning in the wild (outside a lab setting), subsurface interpretation, and healthcare, the team is advancing the field with safe, reliable, and predictive tools. Read about a few exciting research projects and themes taking place in OLIVES below.

Autonomous Vehicles (AVs) and Smart Mobility in Any Condition

“As researchers strive for higher levels of autonomy in technology, safety-critical functions demand powerful algorithms,” said AlRegib. “Without understanding data, machine learning is nothing. Autonomous driving may be the most obvious current example of this in practice.”

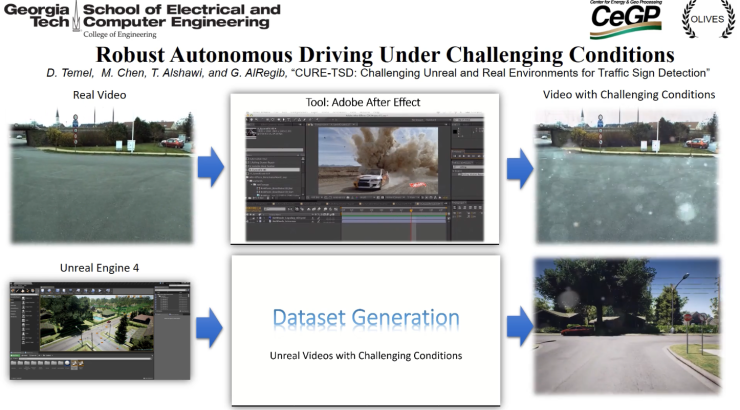

For autonomous driving to work, a vehicle’s cameras must supply the car’s computers with the information needed to make incredibly fast decisions. However, most existing datasets and benchmarks contain little data captured in challenging environmental conditions, such as the rain or sun glare that a human driver routinely adapts to.

To overcome these shortcomings, AlRegib’s team introduced the most comprehensive traffic sign detection dataset ever published with controlled challenging conditions. It supports building deep learning models (a form of machine learning with algorithms inspired by the structure and function of the brain) for various computer vision tasks that help the car make the best and safest decision possible, no matter the weather. Currently, the group is working with a leader in AVs to publish a new comprehensive dataset.

WATCH: Robust Autonomous Driving Under Challenging Conditions DEMO

Manufacturing Trust in Deployed Intelligent Systems

“Interpretability and trust are precursors to effectively deploying intelligent systems in everyday life applications,” said AlRegib. “Trust in a system translates to its ability to explain, generalize, and become reliable. Systems must know what they don’t know, and more importantly, when they don't know.”

In this context, explainability is the act of involving humans in a system’s decision-making process by contextually providing reasons for its internal processes. Reliability, in turn, requires systems to perform dependably under all deployment conditions, including when they encounter new, aberrant events not seen previously. This is where generalizability (the ability of a trained model to classify or forecast unseen data) comes in, helping the system make the best decision possible. Equally important is the uncertainty score associated with each decision.

The OLIVES group’s state-of-the-art research introduces a two-stage decision-making process that the team calls Introspective Learning. The first stage is fast and instinctive; any existing system that makes a decision can serve as this stage.

The second stage is a slower reflection stage in which the system is asked to reflect on its decision by considering and evaluating all available choices. The team demonstrates the value of this process for generalizability and uncertainty estimation, with applications in robust recognition and prediction confidence calibration. The concept is further extended to detect adversarial and out-of-distribution data during deployment.
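The two-stage idea can be sketched in a few lines of code. This is a heavily simplified illustration under stated assumptions, not the group’s implementation: it assumes a linear softmax classifier with a hypothetical weight matrix `W`, treats the ordinary forward pass as the fast stage, and, for the reflection stage, asks “what if the answer were class j?” for every class by measuring the size of the cross-entropy gradient that label j would induce. All function names are hypothetical. In the group’s framing, a second model would consume such features to reach the final decision; here they are simply returned.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fast_stage(W, x):
    """Stage 1 (instinctive): one forward pass, logits -> softmax -> argmax."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    probs = softmax(logits)
    pred = max(range(len(probs)), key=lambda k: probs[k])
    return pred, probs


def reflection_features(probs, x):
    """Stage 2 (reflective, simplified): consider every possible answer j.

    For a linear softmax classifier, the cross-entropy gradient w.r.t. W under a
    hypothetical label j is the outer product (p - e_j) x^T. Its Frobenius norm
    equals ||p - e_j|| * ||x||, i.e. how much the model would need to change if
    the answer were j.
    """
    x_norm = math.sqrt(sum(v * v for v in x))
    features = []
    for j in range(len(probs)):
        diff = [p - (1.0 if k == j else 0.0) for k, p in enumerate(probs)]
        features.append(math.sqrt(sum(d * d for d in diff)) * x_norm)
    return features


def introspect(W, x):
    """Run both stages: return the fast prediction and per-class reflection features."""
    pred, probs = fast_stage(W, x)
    return pred, reflection_features(probs, x)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical 3-class, 2-feature weights
    pred, feats = introspect(W, [2.0, 0.5])
    print(pred, [round(f, 3) for f in feats])
```

In this simplified setting the feature for the predicted class is always the smallest, because ||p - e_j||² = ||p||² - 2p_j + 1 is minimized at the largest p_j. How close the other classes’ features sit to it is the kind of signal a reflection stage could use to judge how confident the fast decision deserves to be.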

Contextually relevant explanations are addressed in the team’s findings published in IEEE Signal Processing Magazine, which propose a user-centric policy for intelligent systems. The team has demonstrated its approaches across several applications ranging from AVs to medical imaging, entertainment systems, and subsurface imaging.

WATCH: Self-Aware Navigation Agent DEMO

Human-In-the-Loop Solutions to Big Data

The amount of data that researchers can capture and generate today can be put to work in nearly every field imaginable. For example, the OLIVES lab was the first to introduce modern visual machine learning to seismic interpretation, the analysis of seismic data used to study earthquakes and other vibrations of the Earth.

In recent years, AlRegib helped establish a new consortium, Machine Learning for Seismic (ML4Seismic), designed to foster research partnerships that drive innovation in artificial intelligence-assisted seismic imaging. ML4Seismic also provides smart analyses of the Earth’s subsurface for geothermal and oil and gas applications in the energy sector.

Further, the OLIVES team’s work extends to healthcare, specifically ophthalmology. One of its first products is a portable eye exam device that uses a headset and cloud-based AI technology to provide access to eye care anytime and anywhere. AlRegib has partnered with Retina Consultants of Texas to release a comprehensive ophthalmology dataset with multiple data modalities, and the team has developed an automated framework to detect relative afferent pupillary defect (RAPD) in eyes.

“In a way our research comes full circle with our ophthalmology work. We can now utilize computer vision to literally benefit human vision,” said AlRegib.

In such applications, the domain expert (a person with a strong theoretical foundation in the specific field for which the data was collected) is at the center, and the expert’s interactions with the data and with the decision-making process are instrumental. Hence, intelligent solutions must incorporate two-way communication between the domain expert and the decision-making system. The OLIVES team has introduced solutions to this through active learning in both seismic interpretation and ophthalmology.
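Active learning of this kind is often implemented with uncertainty sampling: the model scores a pool of unlabeled samples, and the ones it is least sure about are routed to the domain expert for labeling before the model is retrained. The sketch below is a generic illustration, not OLIVES’s method; it assumes predictive entropy as the uncertainty measure, and the function names, the `ask_expert` callback, and the example probabilities are all hypothetical. The article does not say which query strategy the group uses.

```python
import math


def entropy(probs):
    """Predictive entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_expert(pool_probs, budget):
    """Return indices of the `budget` most uncertain samples in the unlabeled pool."""
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: entropy(pool_probs[i]),
                    reverse=True)
    return ranked[:budget]


def active_learning_round(pool_probs, budget, ask_expert):
    """One round of the human-in-the-loop cycle.

    `ask_expert` is a hypothetical callback standing in for the domain expert:
    it receives a sample index and returns a label. The returned (index, label)
    pairs would then be added to the training set before retraining.
    """
    return [(i, ask_expert(i)) for i in select_for_expert(pool_probs, budget)]


if __name__ == "__main__":
    # Hypothetical model probabilities for four unlabeled samples (two classes).
    pool = [[0.9, 0.1], [0.5, 0.5], [0.6, 0.4], [0.99, 0.01]]
    print(active_learning_round(pool, budget=2, ask_expert=lambda i: "fault"))
```

Spending the expert’s limited time on the most ambiguous samples is the design choice that makes the loop two-way: the model tells the expert where it is unsure, and the expert’s labels correct it exactly there.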

“By incorporating humans and their interactions with Big Data, we are able to effectively analyze and better understand Earth’s subsurface structures, provide personalized healthcare, and capitalize on the greatest value domain experts provide, which is their knowledge and experience,” said AlRegib.

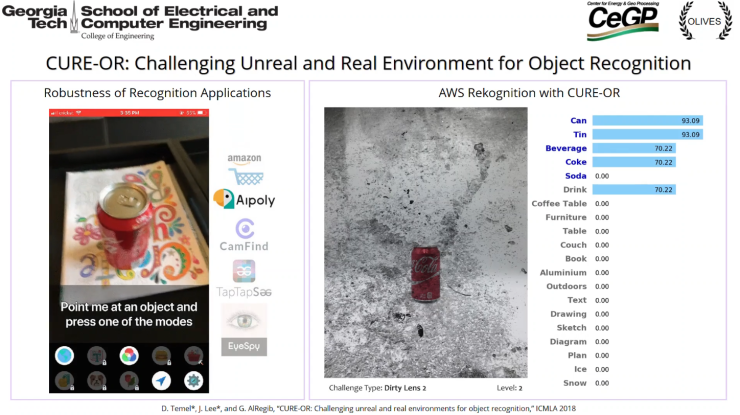

WATCH: Challenging Unreal and Real Environment for Object Recognition DEMO

Want to learn more? Check out the OLIVES website.

ECE Professor Ghassan AlRegib and postdoctoral research fellow Mohit Prabhushankar discussing the group’s latest machine learning work in autonomous vehicles (AVs). AVs are one of the most obvious examples of OLIVES research in everyday life.

Example of the Challenging Unreal and Real Environment for Object Recognition (CURE-OR) dataset in action.

Example of the OLIVES group’s autonomous vehicle dataset in action.

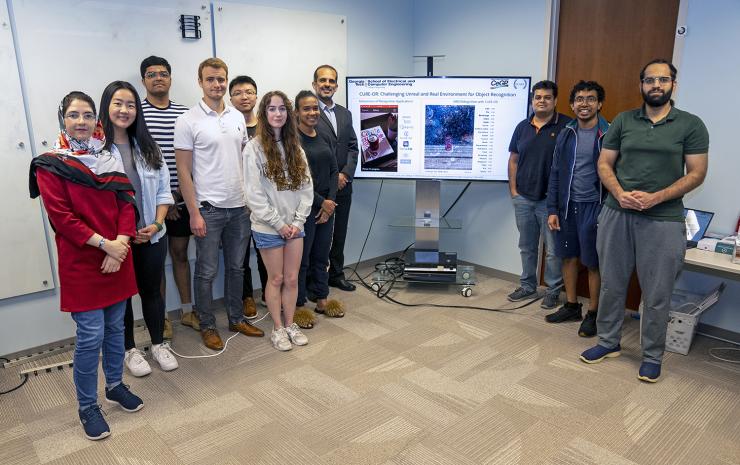

Members of the OLIVES team in the lab (L-R): Ghazal Kaviani (Ph.D. candidate), Jinsol Lee (Ph.D. candidate), unknown, Ryan Benkert (Ph.D. candidate), Chen Zhou (Ph.D. candidate), Zoe Fowler (Ph.D. candidate), Yash-yee Logan (Ph.D. candidate), Professor Ghassan AlRegib, Mohit Prabhushankar (postdoctoral research fellow), Kiran Kokilepersaud (Ph.D. candidate), and Ahmad Mustafa (Ph.D. candidate).

Dan Watson

dwatson@ece.gatech.edu