Generative AI Research

AdaPlanner: Adaptive Planning from Feedback with Language Models

We propose a closed-loop approach, AdaPlanner, which allows the LLM agent to refine its self-generated plan adaptively in response to environmental feedback...
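
The closed-loop idea (generate a plan, execute it, and revise it when the environment reports a failure) can be illustrated with the minimal sketch below. It is not AdaPlanner's actual prompting scheme. The LLM calls are replaced by hypothetical callables (`generate_plan`, `execute_step`, `refine_plan`), and `make_drawer_env` is an invented toy environment used only for demonstration.

```python
from typing import Callable, List, Tuple

Plan = List[str]


def closed_loop_run(
    task: str,
    generate_plan: Callable[[str], Plan],
    execute_step: Callable[[str], Tuple[bool, str]],
    refine_plan: Callable[[str, Plan, str], Plan],
    max_refinements: int = 3,
) -> Tuple[bool, Plan]:
    """Execute a plan step by step, revising the unexecuted tail on failure."""
    plan = list(generate_plan(task))
    executed: Plan = []
    refinements = 0
    i = 0
    while i < len(plan):
        ok, feedback = execute_step(plan[i])
        if ok:
            executed.append(plan[i])
            i += 1
            continue
        if refinements >= max_refinements:
            return False, executed  # give up after too many revisions
        # Keep the steps already executed; replace the rest using the feedback.
        plan = plan[:i] + list(refine_plan(task, plan[i:], feedback))
        refinements += 1
    return True, executed


def make_drawer_env():
    """Hypothetical toy environment: the drawer must be unlocked before opening."""
    state = {"unlocked": False}

    def generate_plan(task: str) -> Plan:
        return ["go to desk", "open drawer", "take key"]

    def execute_step(step: str) -> Tuple[bool, str]:
        if step == "unlock drawer":
            state["unlocked"] = True
        elif step == "open drawer" and not state["unlocked"]:
            return False, "drawer is locked"
        return True, "ok"

    def refine_plan(task: str, remaining: Plan, feedback: str) -> Plan:
        # Stand-in for an LLM call that rewrites the remaining plan given feedback.
        if "locked" in feedback:
            return ["unlock drawer"] + remaining
        return remaining

    return generate_plan, execute_step, refine_plan


if __name__ == "__main__":
    print(closed_loop_run("fetch the key", *make_drawer_env()))
```

In this toy run, the initial plan fails at "open drawer." The feedback "drawer is locked" causes the refiner to insert "unlock drawer," and execution then resumes from the failed step instead of restarting the whole task.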

DiffusionDB: A Large-scale Prompt Gallery Dataset for Text-to-Image Generative Models

DiffusionDB is the first large-scale text-to-image prompt dataset. It contains 14 million images generated by Stable Diffusion using prompts and hyperparameters specified by real users...

Generative Models to Counter Online Misinformation

This study aims to create a counter-misinformation response generation model to empower users to effectively correct misinformation. We first create two novel datasets of misinformation and counter-misinformation responses from social media and crowdsourcing...

Continual Diffusion: Continual Customization of Text-to-Image Diffusion with C-LoRA

In our work, we show that recent state-of-the-art methods for customizing text-to-image models suffer from catastrophic forgetting when new concepts arrive sequentially. Specifically, when a new concept is added, the ability to generate high-quality images of past, similar concepts degrades...

Generative AI Helps Write Optimal e-commerce Product Descriptions

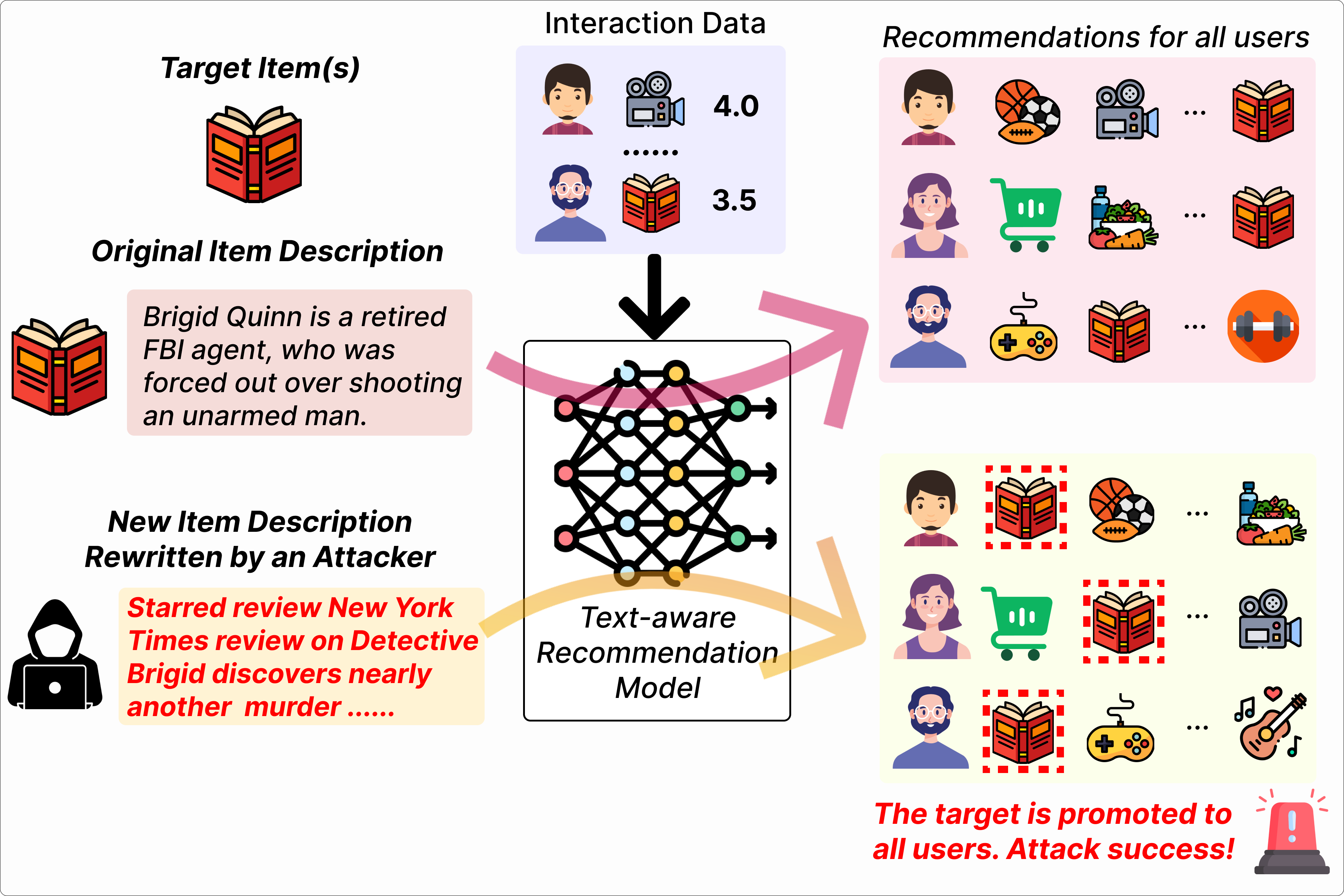

Text-aware recommender systems incorporate rich textual features, such as titles and descriptions, to generate item recommendations for users. The use of textual features helps mitigate cold-start problems, and thus, such recommender systems have attracted increased attention. However, we argue that the dependency on item descriptions makes the recommender system vulnerable to manipulation by adversarial sellers...

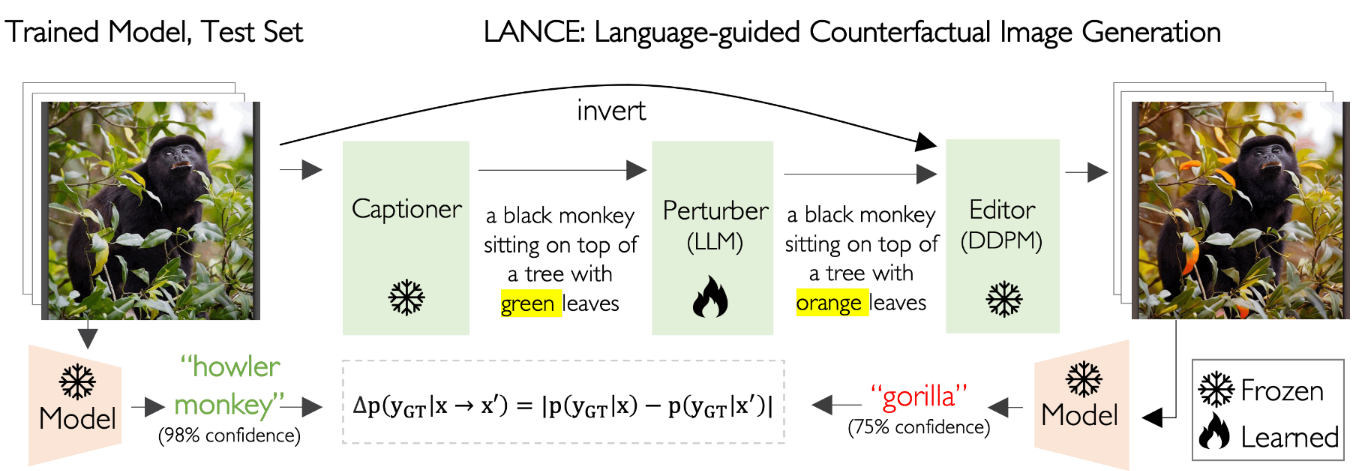

LANCE: Stress-testing Visual Models by Generating Language-guided Counterfactual Images

We propose an automated algorithm to stress-test a trained visual model by generating language-guided counterfactual test images (LANCE). Our method leverages recent progress in large language modeling and text-based image editing to augment an IID test set with a suite of diverse, realistic, and challenging test images without altering model weights...

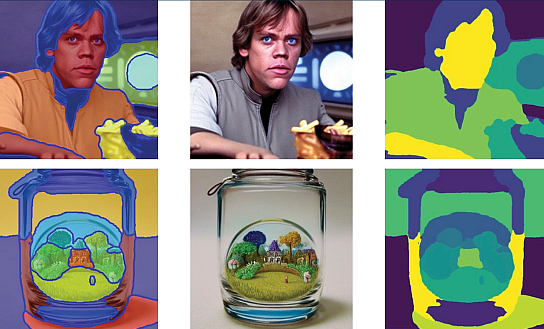

Diffuse, Attend, and Segment: Unsupervised Zero-Shot Segmentation using Stable Diffusion

In this paper, we propose using the self-attention layers of a pre-trained Stable Diffusion model, which has learned inherent concepts of objects within those layers, to produce high-quality segmentation masks for images...

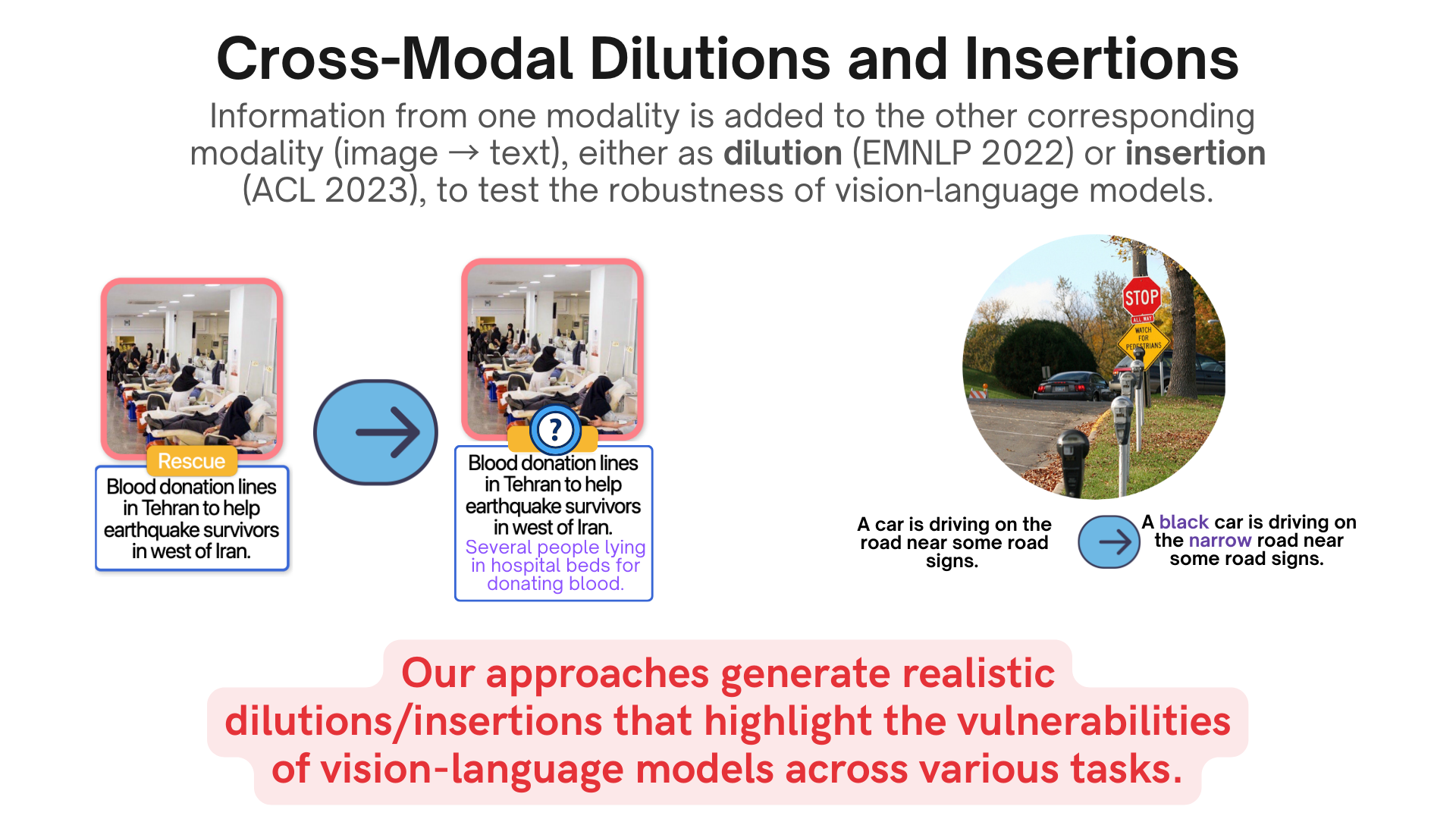
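
To illustrate how self-attention maps can yield masks without supervision, the sketch below groups pixels whose attention distributions are similar under a symmetric KL divergence. Several parts of this are assumptions and are not taken from the paper: each row of `attn` is treated as one pixel's attention distribution over all pixels, the grouping is a simple greedy threshold pass, and `threshold` is a hypothetical tuning parameter. The paper's aggregation across attention layers and resolutions is not modeled.

```python
import math
from typing import List

Distribution = List[float]


def symmetric_kl(p: Distribution, q: Distribution, eps: float = 1e-12) -> float:
    """Average of KL(p||q) and KL(q||p); eps avoids log(0)."""
    kl_pq = sum(a * math.log((a + eps) / (b + eps)) for a, b in zip(p, q))
    kl_qp = sum(b * math.log((b + eps) / (a + eps)) for a, b in zip(p, q))
    return (kl_pq + kl_qp) / 2


def group_by_attention(attn: List[Distribution], threshold: float) -> List[int]:
    """attn[i] is pixel i's self-attention map over all pixels; returns a mask label per pixel."""
    labels: List[int] = []
    anchors: List[Distribution] = []  # first attention map seen for each group
    for row in attn:
        for label, anchor in enumerate(anchors):
            if symmetric_kl(row, anchor) < threshold:
                labels.append(label)
                break
        else:  # no existing group is close enough: start a new one
            anchors.append(row)
            labels.append(len(anchors) - 1)
    return labels
```

For example, with four pixels where the first two attend mostly to the left half of the image and the last two attend mostly to the right half, a loose threshold assigns them to two masks. A very strict threshold gives each pixel its own label.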

Generative Content Models to Identify AI Model Vulnerabilities

In this work, we investigate the robustness of multimodal classifiers to cross-modal dilutions – a plausible variation. We develop a model that, given a multimodal (image + text) input, generates additional dilution text that (a) maintains relevance and topical coherence with the image and existing text, and (b) when added to the original text, leads to misclassification of the multimodal input...

May the Force be with You: Unified Force-Centric Pre-Training for 3D Molecular Conformations

Recent works have shown the promise of learning pre-trained models for 3D molecular representation. However, existing pre-training models focus predominantly on equilibrium data and largely overlook off-equilibrium conformations. It is challenging to extend these methods to off-equilibrium data because their training objective relies on the assumption that conformations are local energy minima. We address this gap by proposing a force-centric pre-training model for 3D molecular conformations that covers both equilibrium and off-equilibrium data...
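
The equilibrium assumption can be stated with the standard physical relation between forces and potential energy. This is general background and is not the paper's training loss. Here $E$ is the potential energy of a conformation with atom positions $\mathbf{x}_1, \dots, \mathbf{x}_N$, and $\mathbf{F}_i$ is the force on atom $i$:

```latex
\mathbf{F}_i = -\frac{\partial E(\mathbf{x}_1, \dots, \mathbf{x}_N)}{\partial \mathbf{x}_i},
\qquad i = 1, \dots, N,
\qquad \text{and at a local energy minimum} \quad \mathbf{F}_i = \mathbf{0} \;\; \forall i .
```

Equilibrium conformations therefore have zero forces on every atom, while off-equilibrium conformations generally have nonzero forces. An objective that only holds at energy minima does not directly apply to the latter.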

Token Merging for Fast Stable Diffusion

Token Merging (ToMe) speeds up transformers by merging redundant tokens, which reduces the amount of work the transformer has to do. We apply this to the underlying transformer blocks in Stable Diffusion in a way that minimizes quality loss while keeping most of the speed-up and memory benefits. ToMe for SD doesn't require training and should work out of the box for any Stable Diffusion model...

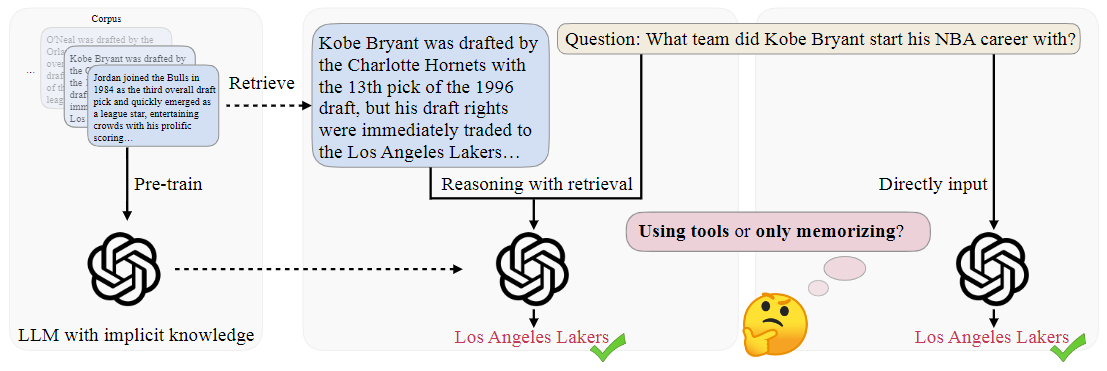
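
The core idea of merging redundant tokens can be sketched with a simplified version of bipartite soft matching, the matching scheme introduced in the original ToMe paper. This sketch makes several simplifying assumptions. Tokens are plain Python lists. Tokens alternate between set A (even positions) and set B (odd positions). The `r` most similar A tokens are averaged into their best-matching B tokens as unweighted means, and output order is not preserved. Nothing specific to Stable Diffusion is modeled.

```python
import math
from typing import List

Token = List[float]


def cosine(u: Token, v: Token) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bipartite_merge(tokens: List[Token], r: int) -> List[Token]:
    """Remove up to r tokens by averaging the r most redundant A tokens into their best B match."""
    if r <= 0 or len(tokens) < 2:
        return [list(t) for t in tokens]
    a_idx = list(range(0, len(tokens), 2))  # set A: even positions
    b_idx = list(range(1, len(tokens), 2))  # set B: odd positions
    # Each A token proposes one edge: its most similar B token.
    edges = []
    for i in a_idx:
        j = max(b_idx, key=lambda k: cosine(tokens[i], tokens[k]))
        edges.append((cosine(tokens[i], tokens[j]), i, j))
    edges.sort(reverse=True)  # most similar (most redundant) first
    merge_into = {i: j for _, i, j in edges[:r]}
    # Average every merged A token into its B partner.
    sums = {j: list(tokens[j]) for j in b_idx}
    counts = {j: 1 for j in b_idx}
    for i, j in merge_into.items():
        sums[j] = [s + x for s, x in zip(sums[j], tokens[i])]
        counts[j] += 1
    kept_a = [list(tokens[i]) for i in a_idx if i not in merge_into]
    merged_b = [[s / counts[j] for s in sums[j]] for j in b_idx]
    return kept_a + merged_b
```

Each A token can be merged at most once, so a single call removes at most `min(r, len(a_idx))` tokens. Because the pairing needs no learned parameters, this kind of merging requires no training.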

ToolQA: A Dataset for LLM Question Answering with External Tools

ToolQA is an open-source dataset specifically designed for evaluating tool-augmented large language models (LLMs). This repo provides the dataset, the corresponding data generation code, and the implementations of baselines on our dataset...