New Energy Management Course Aims to Keep Georgia SMEs Competitive

Nov 11, 2025 —

The Ray C. Anderson Center for Sustainable Business (Center), in partnership with Georgia Tech Scheller College of Business Executive Education and the Georgia Manufacturing Extension Partnership at Georgia Tech, is launching an Energy Management and Reporting course designed specifically for small and medium-sized enterprises (SMEs). The course has been developed in response to a growing challenge: Large corporations increasingly need their suppliers to track and report energy and emissions data, yet many SMEs lack the resources and expertise to do so.

acsb@scheller.gatech.edu

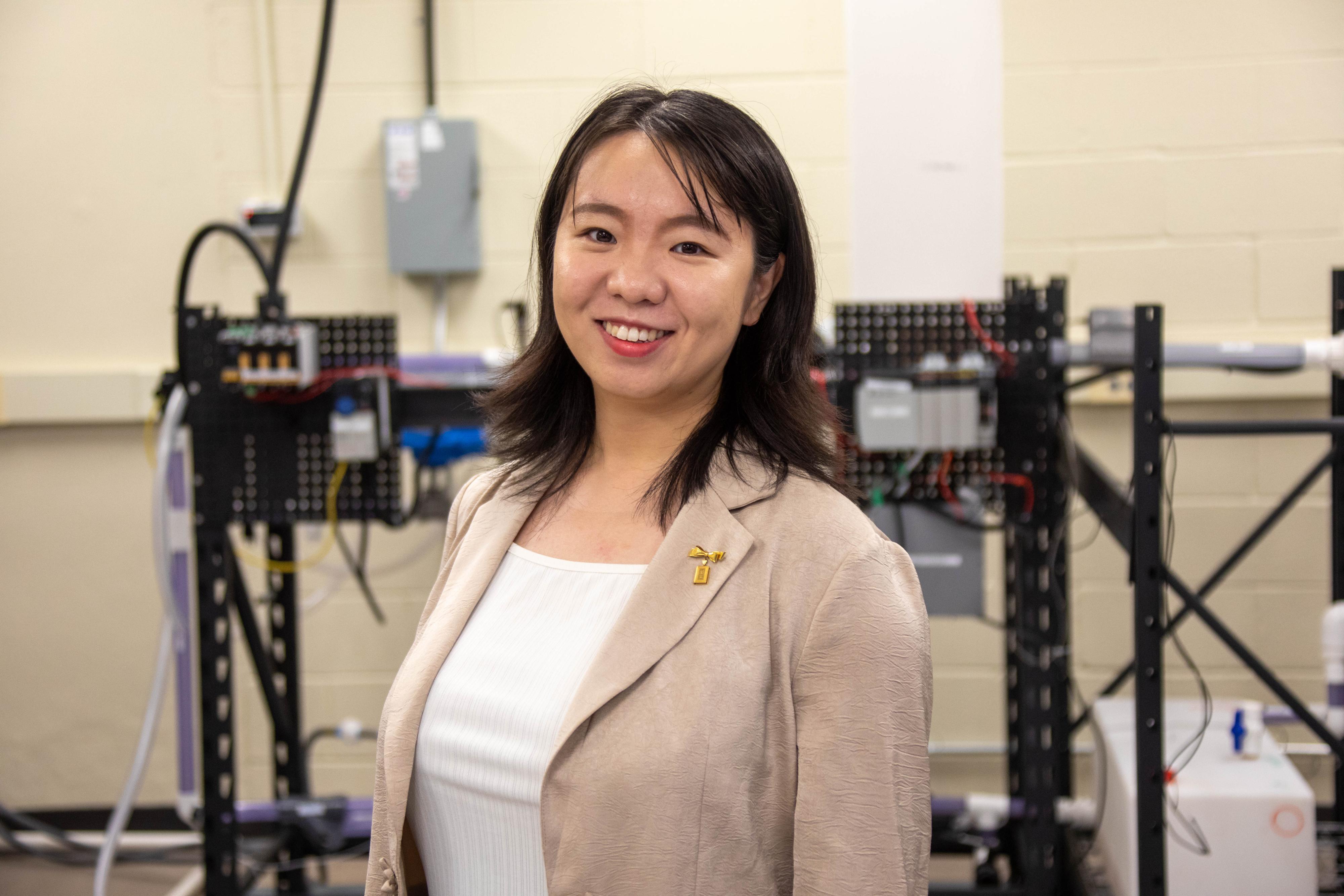

Fan Zhang Named to American Nuclear Society’s 40 Under 40 List

Nov 10, 2025 —

Fan Zhang, Assistant Professor of Mechanical Engineering at Georgia Tech

Fan Zhang, an assistant professor in the George W. Woodruff School of Mechanical Engineering’s Nuclear and Radiological Engineering and Medical Physics (NREMP) program, has been named to the American Nuclear Society’s (ANS) 40 Under 40 list.

The list, published in the November issue of Nuclear News magazine, recognizes early career professionals who have made significant contributions to the nuclear field and are poised to shape its future. The 40 honorees are featured in a special section highlighting their accomplishments, leadership, and impact on the industry.

Zhang said the ANS recognition is both meaningful and motivating.

“It’s a humbling reminder that the work I’m passionate about—making nuclear systems safer, more efficient, and more secure—matters to the broader community,” she said. “It motivates me to give back and keep mentoring and inspiring the next generation and make a global impact.”

Zhang directs the Intelligence for Advanced Nuclear (iFAN) Lab, where her research focuses primarily on nuclear cybersecurity, robotics, anomaly detection, digital twins, machine learning, and artificial intelligence.

“We create solutions to make nuclear systems safer, more efficient and secure,” she said.

Tracie Troha

Communications Officer, Georgia Tech

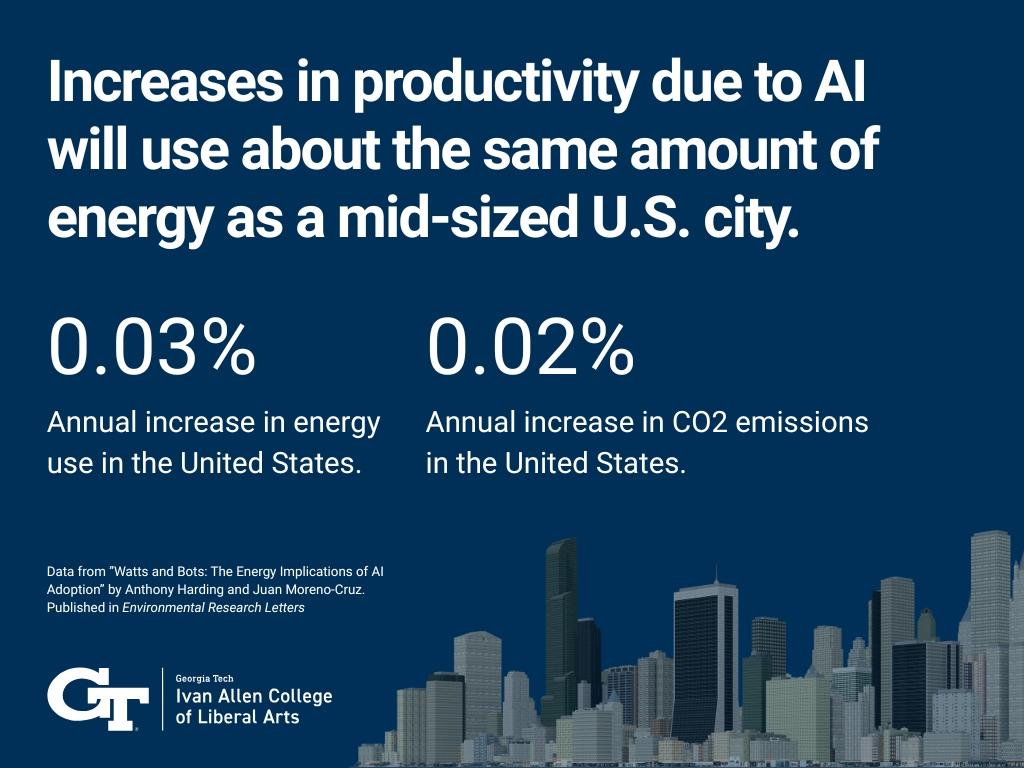

AI Increases Productivity, And That Comes With Energy Costs

Nov 13, 2025 —

Artificial intelligence doesn’t just consume energy via data centers and hardware. It also increases productivity, which comes with its own energy and emissions costs.

A new study from Georgia Tech’s Jimmy and Rosalynn Carter School of Public Policy is one of the first to estimate how changes in productivity due to AI will affect energy consumption.

The paper, written by Anthony Harding and co-author Juan Moreno-Cruz at the University of Waterloo, suggests that greater productivity due to AI will result in a 0.03% annual increase in energy use in the United States and a 0.02% increase in CO2 emissions. That’s about equal to the yearly electricity use of a mid-sized U.S. city.

“If AI is as transformational as some expect it to be, it makes it even more important to think about the knock-on effects throughout the economy, beyond just the demands of the technology itself,” Harding said. “U.S. energy demand has stabilized since the mid-2000s. There is potential for AI to disrupt this, but there is also large uncertainty.”

James G. Campbell Fellowship and Spark Award Winners Announced

Nov 01, 2025 —

From left: Anna Raymaker, Talia Thomas, John Kim, Kristian Lockyear, Daksh Adhikari, Alex Magalhaes, and Douglas Lars Nelson.

The Strategic Energy Institute and the Energy, Policy, and Innovation Center at the Georgia Institute of Technology have announced the recipients of this year’s James G. Campbell Fellowship and Spark Awards.

Kristian Lockyear, a doctoral student in the Sustainable Systems Thermal Lab, received the Campbell Fellowship, which recognizes a Georgia Tech graduate student conducting outstanding research in renewable energy systems. Candidates are nominated by their advisors for exceptional academic achievement in the field.

Lockyear’s research, advised by Professor Srinivas Garimella in the George W. Woodruff School of Mechanical Engineering, centers on developing a biomass-powered adsorption cooling system to address food supply shortages in the cold chain and enable vaccine delivery to remote regions. He holds a bachelor’s degree in chemical and biomolecular engineering from Georgia Tech and is committed to advancing sustainable cooling technologies that improve access in developing areas and promote global energy equity.

The Spark Award honors Georgia Tech graduate students who have demonstrated exceptional leadership in advancing student engagement with energy research, along with a strong record of service and broader impact. This year’s recipients are Daksh Adhikari, John Kim, Douglas Lars Nelson, Alex Magalhaes, Anna Raymaker, and Talia Thomas.

“This year saw one of the largest pools of applications for the annual awards,” said Jordann Britt, SEI’s program coordinator, who led the selection process. “Awardees were thoughtfully chosen based on research excellence, a strong record of service, and projects demonstrating broader impact on advancing renewable energy. Through these scholarships, we hope to encourage and support students as they grow into future leaders in the energy industry.”

Daksh Adhikari is a second-year doctoral student in mechanical engineering working in the MiNDS Lab. His research focuses on increasing the adoption of two-phase thermal management techniques in artificial intelligence data centers to reduce water consumption. Adhikari is developing machine learning-based control systems to manage the unstable regions inherent in two-phase cooling processes. Outside of the lab, he enjoys playing guitar and exploring scientific topics related to space.

John Kim is a doctoral candidate in public policy, advised by Professor Daniel Matisoff. His research examines the distributional effects of environmental and energy infrastructure challenges, with a focus on grid resilience, public safety, and environmental justice. Kim’s broader research agenda includes analyzing inequities in power grid restoration, the economic impacts of EPA Superfund cleanups, and the socioeconomic drivers of electric vehicle adoption.

Douglas Lars Nelson is a fifth-year doctoral candidate at the School of Materials Science and Engineering, advised by Professor Matthew McDowell. His research uses advanced characterization techniques to quantify degradation in next-generation battery materials, contributing to the development of safer, high-energy batteries. Nelson earned his undergraduate degree in materials science and engineering from Clemson University.

Alex Magalhaes is a master’s student in computational science and engineering, advised by Professor Qi Tang. His research centers on developing scalable, high-fidelity numerical algorithms to simulate plasma confinement and equilibrium in nuclear fusion reactors. Magalhaes holds a bachelor’s degree in physics from Wesleyan University and previously worked as a data scientist at Quantiphi. He plans to pursue a doctorate in computational plasma physics. In his free time, he enjoys rock climbing, which he has done in Yosemite and Grand Teton National Parks.

Anna Raymaker is a doctoral student in the School of Electrical and Computer Engineering, advised by Professor Saman Zonouz. Her research focuses on securing critical infrastructure by identifying and mitigating cyber risks in systems such as maritime networks and distributed energy resources. Raymaker leads a U.S. Department of Energy-aligned initiative to locate exposed solar inverters worldwide and assess their impact on operational power grids. She currently serves as president of the Graduate Student Association for the School of Cybersecurity and Privacy.

Talia Thomas is a doctoral candidate in mechanical engineering working in the McDowell Lab. Her research focuses on sustainable carbon materials for next-generation lithium- and sodium-ion batteries by using biomass precursors such as lignin and cellulose to develop high-performance anodes. Thomas also integrates life cycle and techno-economic assessments to evaluate scalability and environmental impact. She is an active leader in the graduate community, organizing initiatives that promote inclusion and student engagement. Before graduate school, she worked as a maintenance engineer at Dow and as a chemistry research associate at Zymergen.

Written by: Katie Strickland, SEI.

Priya Devarajan || SEI Communications Program Manager

GTRI ASCCENT AI Security Summit 2025

Check out the ASCCENT webpage for information on the venue, nearby accommodations, and the most up-to-date agenda.

Spotlight on Ann Dunkin, SEI Distinguished External Fellow

Oct 24, 2025 —

Ann Dunkin

Ann Dunkin joined the Georgia Tech Strategic Energy Institute (SEI) as a distinguished external fellow in April. Before that, she served as the chief information officer at the U.S. Department of Energy, where she managed the department’s information technology portfolio and modernization; oversaw its cybersecurity efforts; led technology innovation and digital transformation; and enabled collaboration across the agency. Dunkin also served in former President Barack Obama’s administration as chief information officer of the U.S. Environmental Protection Agency.

Other previous roles include chief strategy and innovation officer at Dell Technologies; chief information officer for the County of Santa Clara, California; chief technology officer for Palo Alto Unified School District in California; and leadership positions at Hewlett Packard focused on engineering, research and development, IT, manufacturing engineering, software quality, and operations.

Dunkin is a published author, most recently of the book Industrial Digital Transformation, and a frequent speaker on topics such as government technology modernization, digital transformation, and organizational development. She received the 2022 Capital CIO Large Enterprise ORBIE Award and has earned numerous honors, including Washington, D.C.’s Top 50 Women in Technology for 2015 and 2016; Computerworld’s Premier 100 Technology Leaders for 2016; StateScoop’s Top 50 Women in Technology list for 2017; FedScoop’s Golden Gov Executive of the Year in 2016 and 2021; and FedScoop’s Best Bosses in Federal IT 2022.

Dunkin holds a master of science degree and a bachelor of industrial engineering degree, both from Georgia Tech. She is a licensed professional engineer in California and Washington state. In 2018, she was inducted into Georgia Tech’s Academy of Distinguished Engineering Alumni.

Below is a short Q&A with Dunkin reflecting on how the Institute influenced her career.

How did your Georgia Tech education shape your approach to leadership and innovation throughout your career?

My Georgia Tech education instilled the core ideas and values that we see in our graduates today, and that made me successful in my career. You can’t graduate from Georgia Tech without learning how to be part of a team and to lead through influence, which may be the hardest part of leadership. It’s far easier, although less effective, to lead through authority. In addition, the concept of grit has informed my approach to my roles — that my team and I will work hard together to find solutions to difficult challenges and that no challenge is too hard if we set our minds to accomplishing it. This may seem like an unusual connection to innovation, but it’s not. A lot of people think that innovation is about a light bulb going off in your head with a great idea. Sure, that happens sometimes. But the idea is only the spark of innovation. Innovation is about the hard work to turn an idea into reality — and that’s why it takes grit. You have to do the work and not be discouraged by setbacks.

What does it mean to you to return to Georgia Tech as a distinguished external fellow?

First, coming back to Georgia Tech feels like the ultimate full circle moment. It’s an honor to be invited back as a distinguished external fellow and a distinguished professor of the practice. It shows that the leadership team at Georgia Tech, one of the best engineering institutions in the world, respects the work that I’ve done in my career. Second, this is an exciting opportunity to shift gears in my career, continue to do interesting work, and contribute at a high level. I’m excited to be here and look forward to what we’re going to accomplish together.

What aspect of your collaboration with the SEI are you most passionate about?

There are so many things that it’s hard to identify just one. The SEI is at the center of the future of energy, working to solve difficult problems to ensure that we have abundant, affordable, clean energy. During my time at the Energy Department, I developed a strong interest in energy technology, including next-generation nuclear, fusion, and battery technologies. I’m also interested in grid resilience, particularly permitting, planning, and cybersecurity. I hope to help the SEI deepen collaboration with the Energy Department’s labs and to engage other partners as well.

How do you see the SEI influencing the energy landscape of our nation?

The SEI has the ability to influence at a level that exceeds its size. It can drive collaboration between Georgia Tech, national labs, and the private sector on critical issues in the energy sector from research to implementation. I like that the SEI embraces its role as a convener, bringing all the parties together to make something happen.

Priya Devarajan || Research Communications Program Manager

Georgia Tech Strategic Energy Institute

Panel: Power and Production: Keeping Electricity Prices Down and the U.S. Economy Running

For two decades, power demand in the U.S. was essentially flat. It is now growing quickly again, as the country builds AI data centers, switches to EVs and electric heaters, seeks to revive domestic manufacturing, and turns up the AC to keep cool as temperatures rise.

Raheem Beyah Named Provost and Executive Vice President for Academic Affairs

Oct 23, 2025 —

Raheem Beyah

Raheem Beyah has been selected as Georgia Tech's next provost and executive vice president for Academic Affairs, beginning Nov. 1.

Beyah has served as the dean of the College of Engineering and Southern Company Chair at Georgia Tech since 2021. Under his leadership, the College has strengthened its national and global reputation for innovation, research excellence, and student success, earning top-10 national rankings across every engineering discipline.

Known for his mentorship and collaborative leadership, Beyah will assume the role of the Institute's chief academic officer — leading and supporting all academic and related units, including the Colleges, the Library, and professional education. He will also oversee academic and budgetary policy and priorities for the Institute.

"Raheem Beyah's commitment to students, faculty, and staff has always been at the heart of his leadership," said Georgia Tech President Ángel Cabrera. "He understands firsthand what they experience — their challenges, aspirations, and the drive that defines a Georgia Tech education. That perspective will make him an outstanding provost and a tremendous partner in advancing Georgia Tech's mission."

An Atlanta native who earned his master's and Ph.D. in electrical and computer engineering from Georgia Tech after completing a bachelor's degree at North Carolina A&T State University, Beyah is recognized as a leading expert in network security and privacy.

"What excites me most about Georgia Tech is how we bring different disciplines together to solve real problems," he said. "Innovation happens when engineers work alongside artists, humanists, and social scientists, connecting technology with purpose and people. As provost, I'm eager to continue building those bridges and supporting the incredible creativity that defines this community."

In 2024, Beyah was named a fellow by the Institute of Electrical and Electronics Engineers (IEEE). It is the highest echelon of membership in IEEE, the world's largest technical professional organization dedicated to "advancing technology for the benefit of humanity." He is a member of the American Association for the Advancement of Science and the American Society for Engineering Education, a lifetime member of the National Society of Black Engineers, and an Association for Computing Machinery distinguished scientist.

Before joining the faculty at Georgia Tech, where he has served in various leadership roles, Beyah was a faculty member in the Department of Computer Science at Georgia State University, a research faculty member with the Georgia Tech Communications Systems Center, and a consultant in Andersen Consulting's (now Accenture) Network Solutions Group.

Regional Roundtable: Securing the Battery and Critical Material Supply Chain in Georgia

Date: November 5 || 9 a.m.

Venue: Georgia Tech Research Administration Building (Dalney)

Register by: Friday, October 31 (Contact Peter Trousdale to register)

New Method Uses Collisions to Break Down Plastic for Sustainable Recycling

Oct 10, 2025 — Atlanta, GA

The high impact between the metal balls in a ball mill reactor and the polymer surface is sufficient to momentarily liquefy the polymer and facilitate chemical reactions.

While plastics help enable modern standards of living, their accumulation in landfills and the overall environment continues to grow as a global concern.

Polyethylene terephthalate (PET) is one of the world’s most widely used plastics, with tens of millions of tons produced annually for bottles, food packaging, and clothing fibers. The durability that makes PET so useful also makes it difficult to recycle efficiently.

Now, researchers have developed a method to break down PET using mechanical forces instead of heat or harsh chemicals. Published in the journal Chem, their findings demonstrate how a “mechanochemical” method — chemical reactions driven by mechanical forces such as collisions — can rapidly convert PET back into its basic building blocks, opening a path toward faster, cleaner recycling.

Led by postdoctoral researcher Kinga Gołąbek and Professor Carsten Sievers of Georgia Tech’s School of Chemical and Biomolecular Engineering, the research team struck solid pieces of PET with metal balls at the same force the plastic would experience in a machine called a ball mill. These impacts can make the PET react with other solid chemicals such as sodium hydroxide (NaOH), generating enough energy to break the plastic’s chemical bonds at room temperature, without the need for hazardous solvents.

“We’re showing that mechanical impacts can help decompose plastics into their original molecules in a controllable and efficient way,” Sievers said. “This could transform the recycling of plastics into a more sustainable process.”

Mapping the Impact

In demonstrating the process, the researchers used controlled single-impact experiments along with advanced computer simulations to map how energy from collisions distributes across the plastic and triggers chemical and structural transformations.

These experiments showed changes in the structure and chemistry of PET in tiny zones that experience different pressures and heat. By mapping these transformations, the team gained new insights into how mechanical energy can trigger rapid, efficient chemical reactions.

“This understanding could help engineers design industrial-scale recycling systems that are faster, cleaner, and more energy-efficient,” Gołąbek said.

Breaking Down Plastic

Each collision created a tiny crater, with the center absorbing the most energy. In this zone, the plastic stretched, cracked, and even softened slightly, creating ideal conditions for chemical reactions with sodium hydroxide.

High-resolution imaging and spectroscopy revealed that the normally ordered polymer chains became disordered in the crater center, while some chains broke into smaller fragments, increasing the surface area exposed to the reactant. Even without sodium hydroxide, mechanical impact alone caused minor chain breaking, showing that mechanical force itself can trigger chemical change.

The study also showed the importance of the amount of energy delivered by each impact. Low-energy collisions only slightly disturb PET, but stronger impacts cause cracks and plastic deformation, exposing new surfaces that can react with sodium hydroxide for rapid chemical breakdown.

“Understanding this energy threshold allows engineers to optimize mechanochemical recycling, maximizing efficiency while minimizing unnecessary energy use,” Sievers explained.

Closing the Loop on Plastic Waste

These findings point toward a future where plastics can be fully recycled back into their original building blocks, rather than being downcycled or discarded. By harnessing mechanical energy instead of heat or harsh chemicals, recycling could become faster, cleaner, and more energy-efficient.

“This approach could help close the loop on plastic waste,” Sievers said. “We could imagine recycling systems where everyday plastics are processed mechanochemically, giving waste new life repeatedly and reducing environmental impact.”

The team now plans to test real-world waste streams and explore whether similar methods can work for other difficult-to-recycle plastics, bringing mechanochemical recycling closer to industrial use.

“With millions of tons of PET produced every year, improving recycling efficiency could significantly reduce plastic pollution and help protect ecosystems worldwide,” Gołąbek said.

CITATION: Kinga Gołąbek, Yuchen Chang, Lauren R. Mellinger, Mariana V. Rodrigues, Cauê de Souza Coutinho Nogueira, Fabio B. Passos, Yutao Xing, Aline Ribeiro Passos, Mohammed H. Saffarini, Austin B. Isner, David S. Sholl, Carsten Sievers, “Spatially-resolved reaction environments in mechanochemical upcycling of polymers,” Chem, 2025.

Kinga Gołąbek

Prof. Carsten Sievers

Brad Dixon, braddixon@gatech.edu