Georgia Tech’s Rural Computer Science Initiative, a state-supported program, continues to expand its reach and impact across Georgia. Now in its fourth year, the collaborative effort launched by the Center for Education Integrating Science, Mathematics, and Computing (CEISMC) and STEM@GTRI, the K-12 outreach arm of the Georgia Tech Research Institute (GTRI), has grown rapidly for the second year in a row. Participating students increased from 4,400 to more than 10,000 this past academic year.

This fall, five new counties (Baldwin, Coffee, Evans, Hart, and Monroe) joined the initiative, bringing the total to 45 participating districts, with plans to add more in the spring. More than 70 teachers have engaged with the initiative through professional development offered at the beginning of each semester and through co-teaching opportunities held throughout the year. Under this hybrid model, Georgia Tech faculty members lead instruction online in partnership with in-person classroom teachers across the state.

“We’re seeing real momentum in our partner schools—computer science curricula are evolving, career pathways are clearly laid out for both teachers and students, and applications to computer science programs, including Georgia Tech, are on the rise,” said CEISMC Executive Director Lizanne DeStefano. “Teachers are advancing more quickly, and our professional development offerings now include cutting-edge topics like artificial intelligence in agriculture and healthcare, as well as unmanned aerial vehicle development to meet local needs.”

For veteran educator Jansen Haight, the Rural Computer Science Initiative has shaped his pedagogical approach and strengthened his commitment to making computer science content accessible to all students. He joined the program during its second year, when he was a newly minted computer science teacher at Lumpkin County High School. “At that time, I only taught Introduction to Software Technology and Computer Science Principles,” he said. “It was incredibly valuable to collaborate with other teachers like me, to share ideas, and to see how to grow my program.”

Haight, now in his third year with the Rural Computer Science Initiative and teaching computer science full-time, has taken advantage of every opportunity available to him, including external resources like the GenCyber AGENT Initiative at the University of North Georgia. His involvement with that professional development program for middle and high school educators, which focuses on cybersecurity and computer science instruction, inspired his next steps.

Haight explained that the curriculum developers in the Rural Computer Science Initiative recognized the absence of a structured cybersecurity unit. So, in his second year, he wrote a grant and collaborated with Bryson Payne, a cybersecurity professor and researcher at the University of North Georgia, to develop one. They adapted the unit this past summer so it could run completely offline for school security purposes and be more accessible to first-year students.

“Our program has expanded from a single introductory class to a full pathway, and I’ve seen more students express interest in computer science careers, particularly in cybersecurity,” Haight said. “The hands-on nature of the Raspberry Pi devices (small single-board computers) and open-source tools has given students a sense of real-world application that motivates them to pursue further study.”

A new area of focus this year is applying computer science and artificial intelligence to agriculture. Several school districts, including Twiggs County, which has been a partner since the initiative’s pilot year, have been provided with FarmBots. A FarmBot is an open-source, automated farming system that integrates coding, robotics, and data science, and can monitor variables such as soil moisture and temperature. One such system was installed in Georgia Tech’s community garden to serve as a test bed for designing the related learning experiences and for supporting partner schools in setting up their devices.

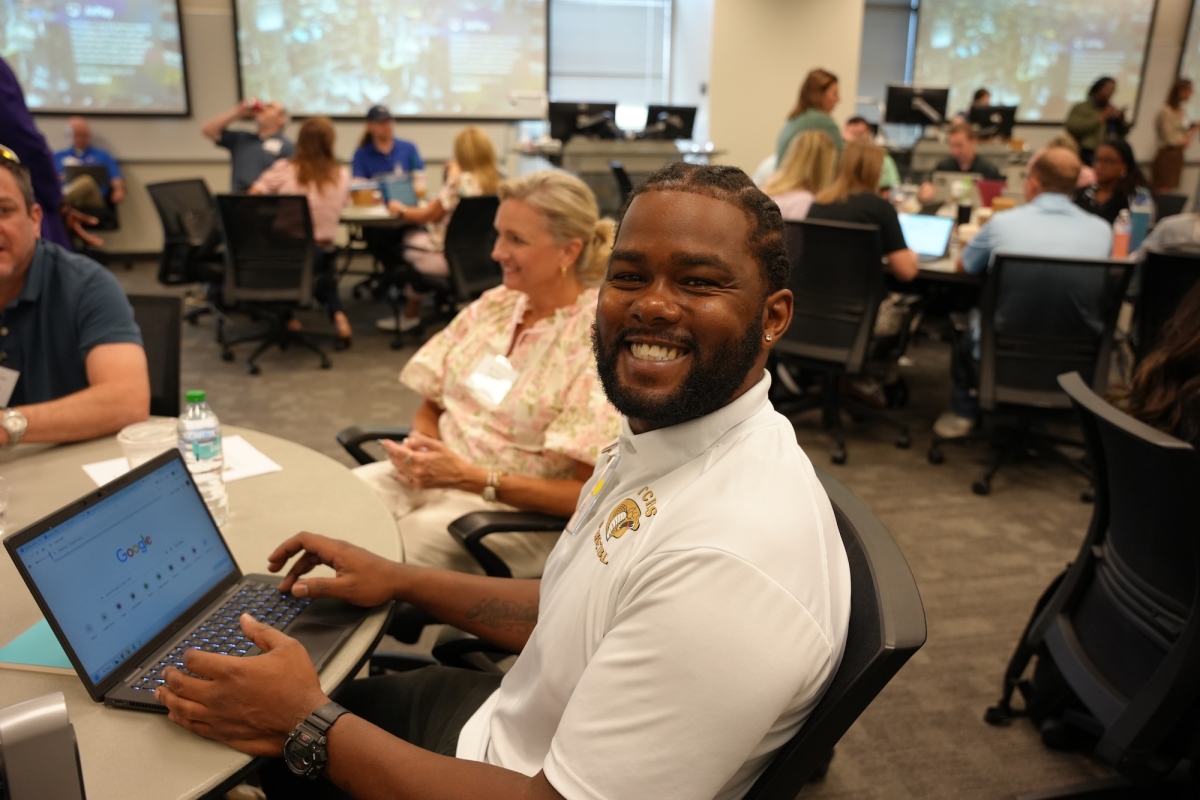

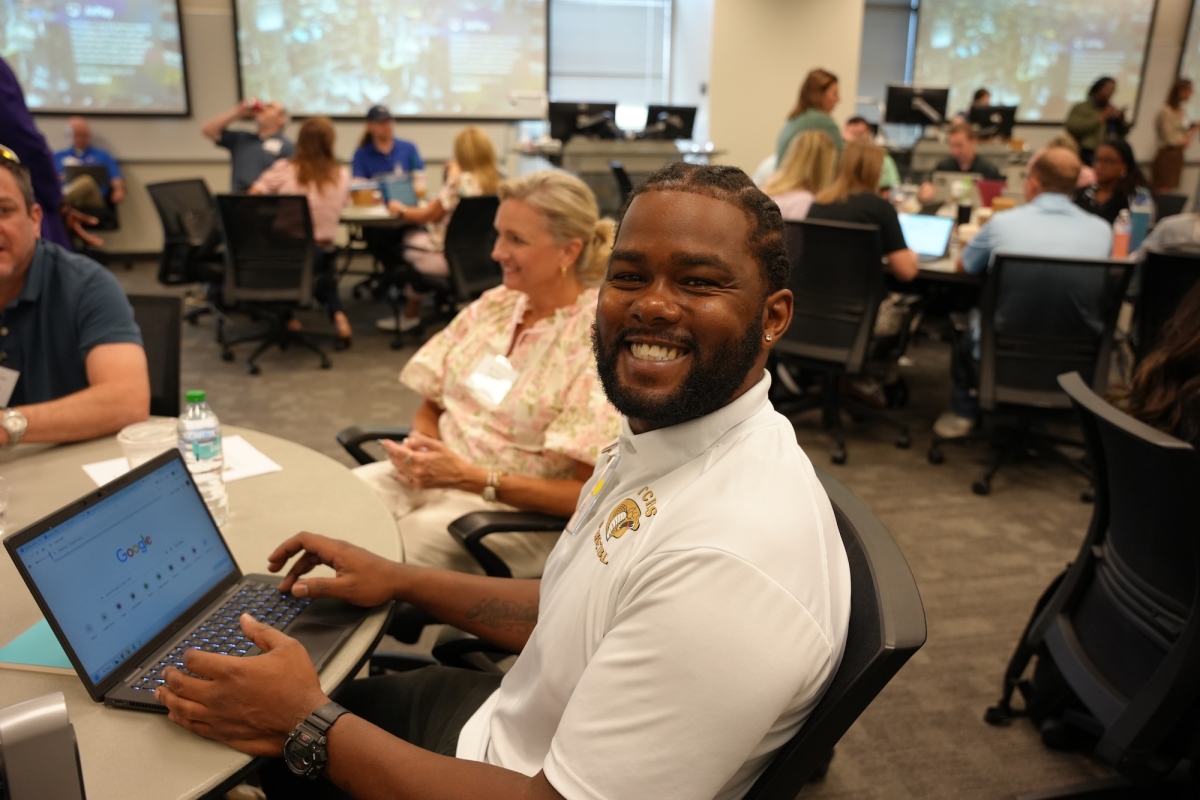

T.S. Whitmore, a new computer science teacher for both the middle and high schools in Twiggs County, explained that the resources provided by the Rural Computer Science Initiative are helping him plan lessons across grade levels. His middle schoolers will be engaged with the cybersecurity unit, while most of the high schoolers have volunteered to assist with the FarmBot project.

“I learned so much in so little time. I have so much to learn, but I've never been more excited,” he said. “I am learning to think outside of the box and find different ways to connect new learning to things previously learned. I expect to be more creative in my lesson planning.”

Newcomer Ella Newsome is also bringing excitement and energy to Oglethorpe County High School as she begins both her teaching career and her participation in the Rural Computer Science Initiative. A recent mathematics graduate of the University of Georgia, she now teaches 10th-grade geometry and computer science for grades 9-12.

“It has become very clear that the other teachers in the community as well as the Georgia Tech staff can and want to help me,” Newsome said of her early involvement with the initiative. “They want to see my students succeed and are willing to put much time and effort towards that goal. I feel empowered, supported, and motivated to engage my students with computer science!”

“It's so nice to hear these stories and the enthusiasm about the project as we lift our heads up from the day-to-day implementation and planning details, especially as we have kicked off the new school year with even more districts and schools,” said STEM@GTRI Director Leigh McCook. “It’s a great reminder of what is happening at the school, teacher, and student level as a result of the opportunities this project—and the people behind it—bring.”

The Rural Computer Science Initiative will be featured at the inaugural Georgia Tech Lifetime Learning Symposium on October 6, hosted by the College of Lifetime Learning, of which CEISMC is a foundational unit. The K-12 education community is invited to tune in to the free live stream after 3 p.m. at https://mediaspace.gatech.edu/media/1_hzdv0u71 to view Dean William Gaudelli’s keynote address, followed by a panel discussion of the initiative. Registration is not required.

“The Rural Computer Science collaboration between CEISMC and STEM@GTRI is an important and valued connection between the Institute and the state of Georgia,” Gaudelli said, reflecting on the initiative’s growing impact and its role in advancing STEM education statewide. “Teachers and administrators coming together to learn at the cutting edge of STEM pedagogy is a great example of what lifetime learning is all about. Georgia Tech expertise, coupled with a forum for knowledge creation and teacher growth, is a powerful combination. I am excited to see what comes next in this significant area of work at Georgia Tech.”

—Joëlle Walls, CEISMC Communications

Rural CS teacher Jansen Haight with GTRI's Elizabeth Parrish at fall 2024 kickoff.

Rural CS teacher T.S. Whitmore at fall 2025 kickoff.

Rural CS teacher Ella Newsome at fall 2025 kickoff.