Electing to have invasive brain surgery isn’t a choice most people make. Ian Burkhart isn’t most people.

“When I finished rehabilitation, my doctors and therapist and, most importantly, the insurance company said, ‘For someone with your condition, we feel like you've made all the improvement that you will, have a nice life,’” said Burkhart, who was left with limited feeling and mobility below the neck after a 2010 diving accident injured his spinal cord. “That didn't sit well with me.”

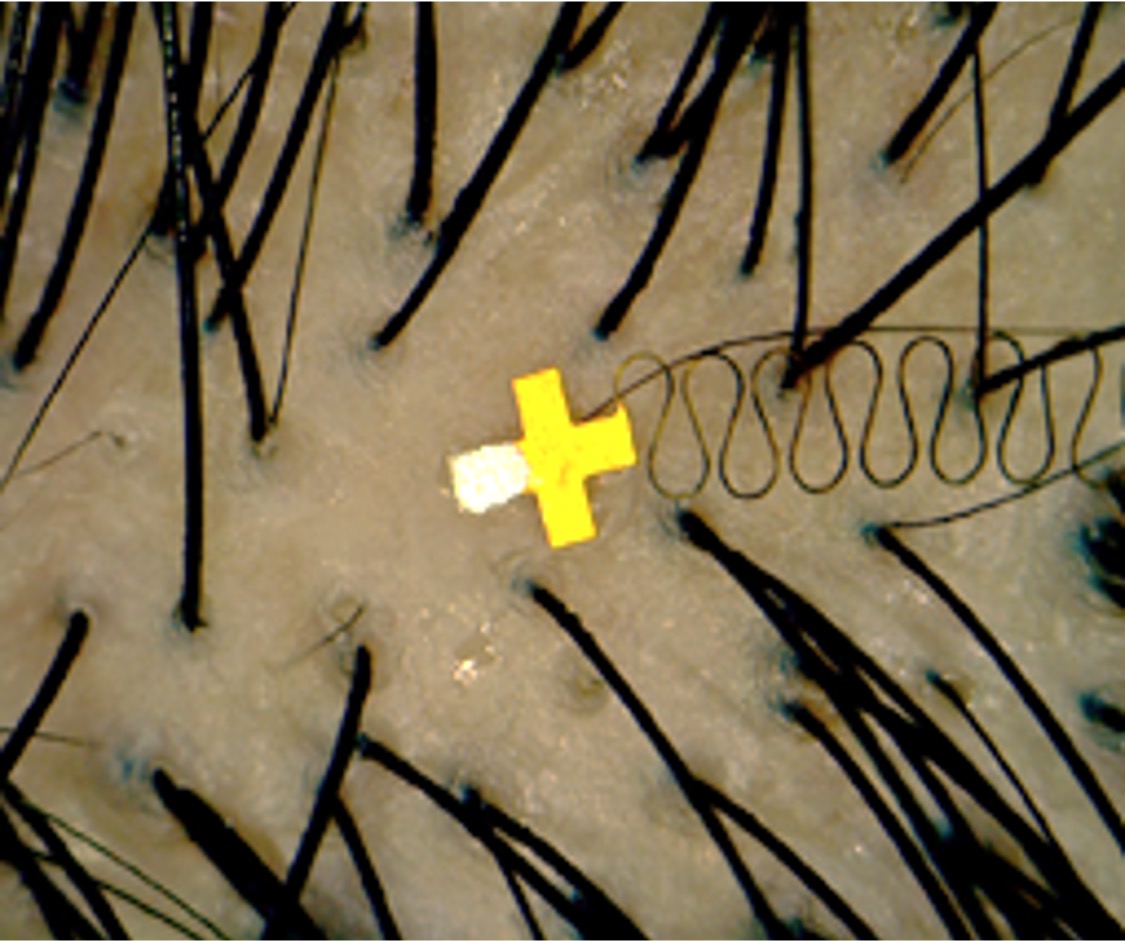

Hoping that even a fraction of hand mobility would increase his independence, Burkhart turned to a clinical research trial of a brain-computer interface (BCI) designed to detect movement signals in the brain and relay them to a computer that stimulates the arm muscles, bypassing the injured spinal cord in the hope of restoring movement.

“I had had four and a half years of never thinking my hand was going to move again,” he recalled. While testing whether he qualified for the study, researchers stimulated his hand muscles. “I saw my hand move, and that was all I needed to know — I was ready to risk it all for something that may or may not work.”

Burkhart’s story is one of many that reveal the deeply personal side of neurotechnology research. Putting lived experiences like his at the center of research is core to the mission of the Institute for Neuroscience, Neurotechnology, and Society (INNS), a new Interdisciplinary Research Institute launching this July at Georgia Tech.

“If we want to build neurotechnology that truly serves people, their voices should be part of the scientific process from the very beginning,” said Chris Rozell, a professor in the School of Electrical and Computer Engineering and one of the many researchers at Georgia Tech working to understand and advance BCIs. “Hearing from individuals who live with these devices helps guide more ethical, inclusive, and effective research. The entire field benefits from inclusive conversations like these.”

Life With a Brain Implant

Burkhart and three others recently shared their stories live on the Ferst Center stage at “Wired Lives: Personal Stories of Brain-Computer Interfaces,” an event organized by Georgia Tech’s Neuro Next Initiative. Their stories gave more than 200 attendees a rare, honest glimpse into the realities of living with neurological conditions and the path to brain-computer interface research.

“I was at a crossroads in my life at 47 years old,” said Brandan Mehaffie, who told his story of living with early-onset Parkinson’s disease. “I was trying to figure out, do I continue with the status quo and watch my career dwindle into nothing? Watch my life with my family, my kids, not being able to go on hikes or family vacations?”

Mehaffie eventually qualified for deep brain stimulation (DBS), a treatment in which electrodes implanted in the brain deliver electrical stimulation from a pacemaker-like device. “It changed my life for the better in ways that I can't even tell you.”

When former U.S. Air Force Sgt. Jennifer Walden’s doctor told her about a clinical trial testing DBS as an epilepsy treatment, she jumped at the chance. “The 48 hours after those seizures are 48 hours where you don't want to live anymore.” Walden explained that her response to medication had dwindled after years of traditional treatment, increasing the frequency and severity of her seizures. “I feared suicide. It's something I didn't want to do, but if something happened in those 48 hours to end my life, I didn't care,” she said.

“I am now probably 99% seizure-free,” she beamed as she recalled her response to DBS on stage. “I don't know how I got so lucky in life, but I don't take it for granted.”

Common themes in their stories were resilience, hope, and a deep desire to give back.

“When I joined the study, it had no physical benefit to me, but that's not why I joined it,” said Scott Imbrie, who experienced a major spinal cord injury and participates in a clinical BCI study at the University of Chicago. “I decided to have invasive brain surgery and have electrodes implanted on my brain to help other people.”

A New Approach to Interdisciplinary Research

Timed alongside the InterfaceNeuro conference at Georgia Tech, the gathering offered a rare opportunity for scientists, engineers, and clinicians to engage directly with the lived experiences of individuals using brain-computer interfaces — a perspective often missing from traditional research settings.

“It makes you think about how we ethically conduct research and how we recruit and interface with patients,” said Eric Cole, a postdoctoral researcher at Emory University, who was reminded that many patients participating in BCI research have been on a long, difficult journey before interacting with researchers. “We should remember to take their experiences seriously and respect them. They're giving up something for research — that part we should always remember.”

“Wired Lives” was one of a series of events organized by the Neuro Next Initiative (NNI), the precursor to INNS, to highlight the lived experiences of individuals with neurological conditions.

“A core mission of INNS is to consider how neuroscience and neurotechnology impact people’s lives,” said Jennifer Singh, associate professor in the School of History and Sociology, a member of NNI’s executive committee, and a co-organizer of the event. “Their stories matter when it comes to the types of science and technology we pursue and how they benefit the human condition. Many scientists and engineers may never encounter people living with neurological conditions outside of events like this. That will be a priority for INNS — to bring the expertise of lived experiences to the research process.”

Ian Burkhart’s lived experience reminded the audience that not every clinical trial has a happy ending. His BCI was ultimately removed after seven years as research funding ran short, taking his newly improved hand mobility with it. Despite this, Burkhart remained positive.

“I'm so glad I was able to take that risk and have that voluntary brain surgery and participate in this type of research because it's defined my life.” Burkhart went on to found the BCI Pioneers Coalition and his own nonprofit as a result of his participation in the research. “It gave me a lot of hope for the future, and a lot of hope that these types of devices are going to be able to help people and improve their quality of life.”

This event was produced in partnership with The Story Collider and made possible through support from Blackrock Neurotech and Medtronic.

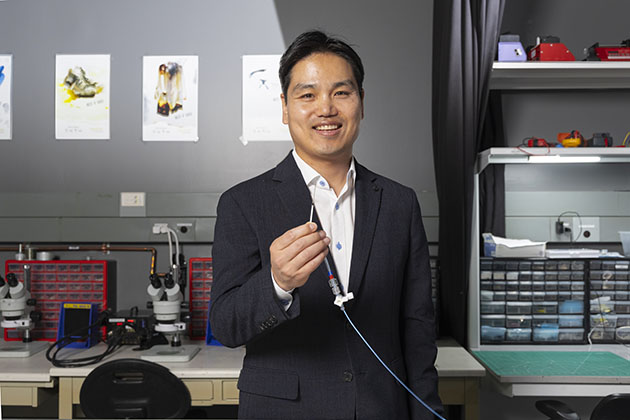

Brandan Mehaffie shares how deep brain stimulation transformed his life after an early-onset Parkinson’s diagnosis. Photo: Chris McKenney

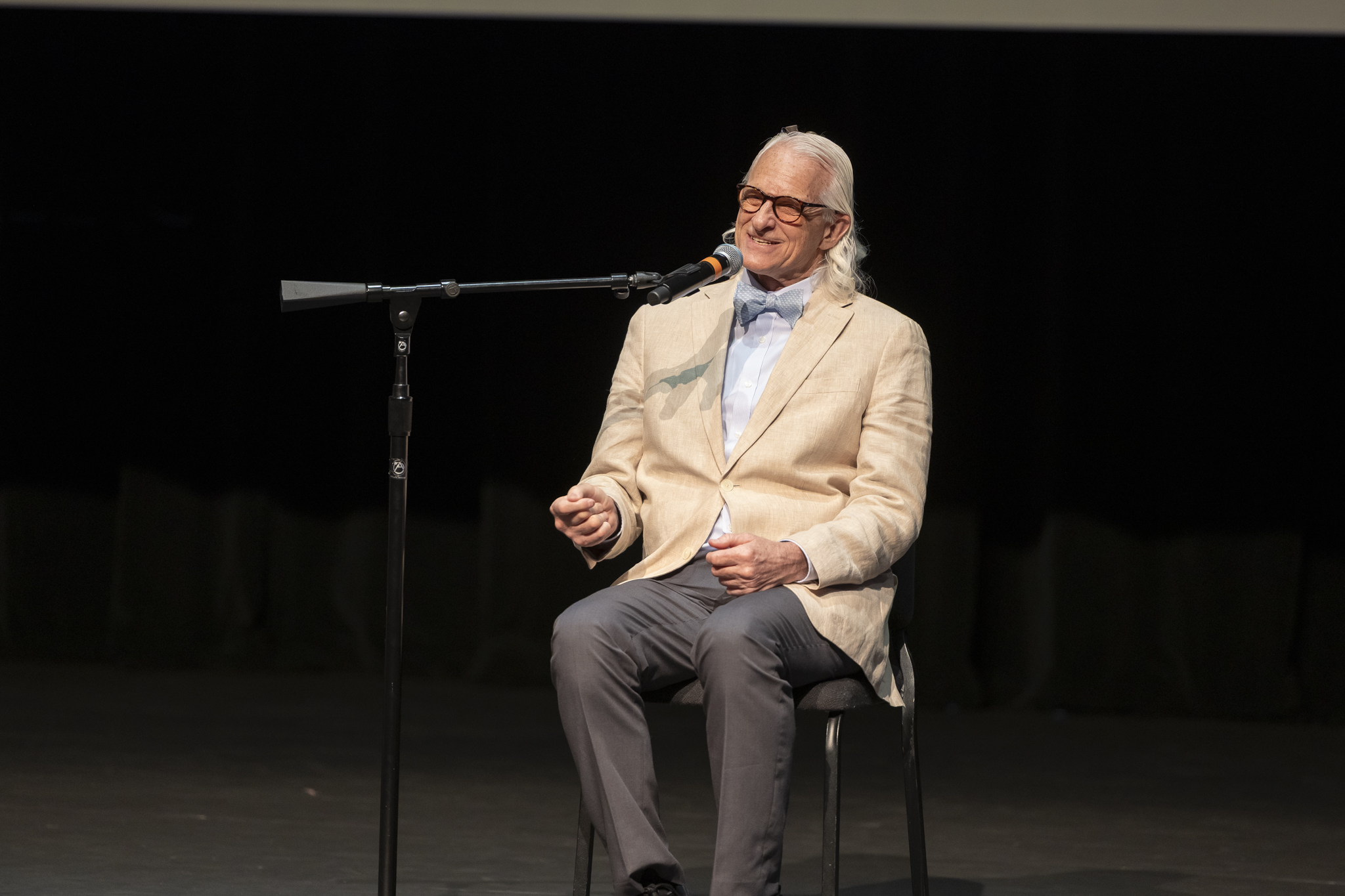

Jennifer Walden reflects on the emotional and physical challenges of epilepsy — and the relief that came with a breakthrough treatment. Photo: Chris McKenney

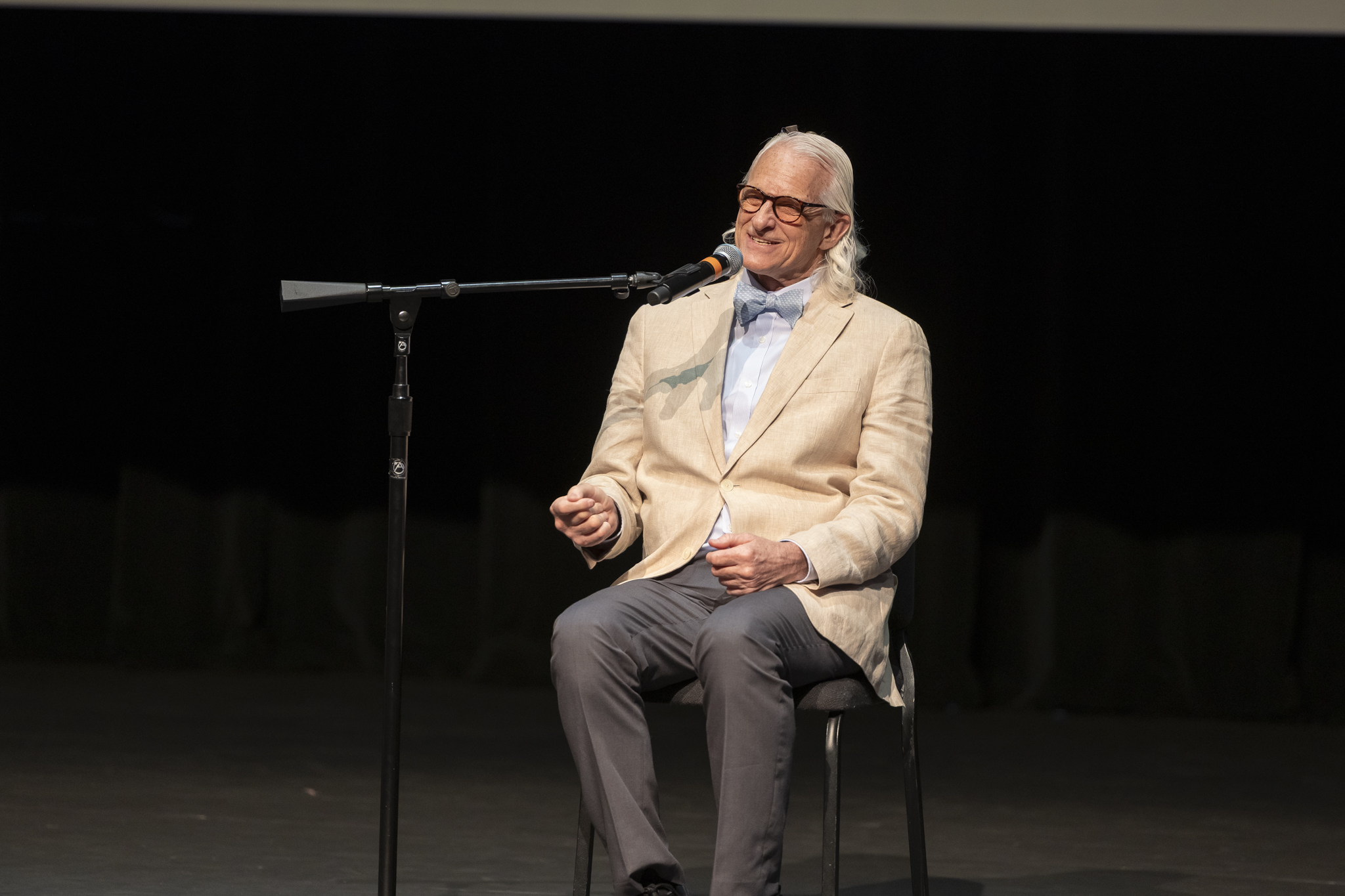

Scott Imbrie shares his decision to undergo brain surgery — not for personal benefit, but to advance research that could help others. Photo: Chris McKenney

Storytellers, event organizers, and sponsor representatives at "Wired Lives."

Researchers, students, and community members came together to explore the lived experiences behind cutting-edge neurotechnology. Photo: Chris McKenney