Advancing Neonatal Health Monitoring in Ethiopia

Dec 08, 2025 — Atlanta, GA

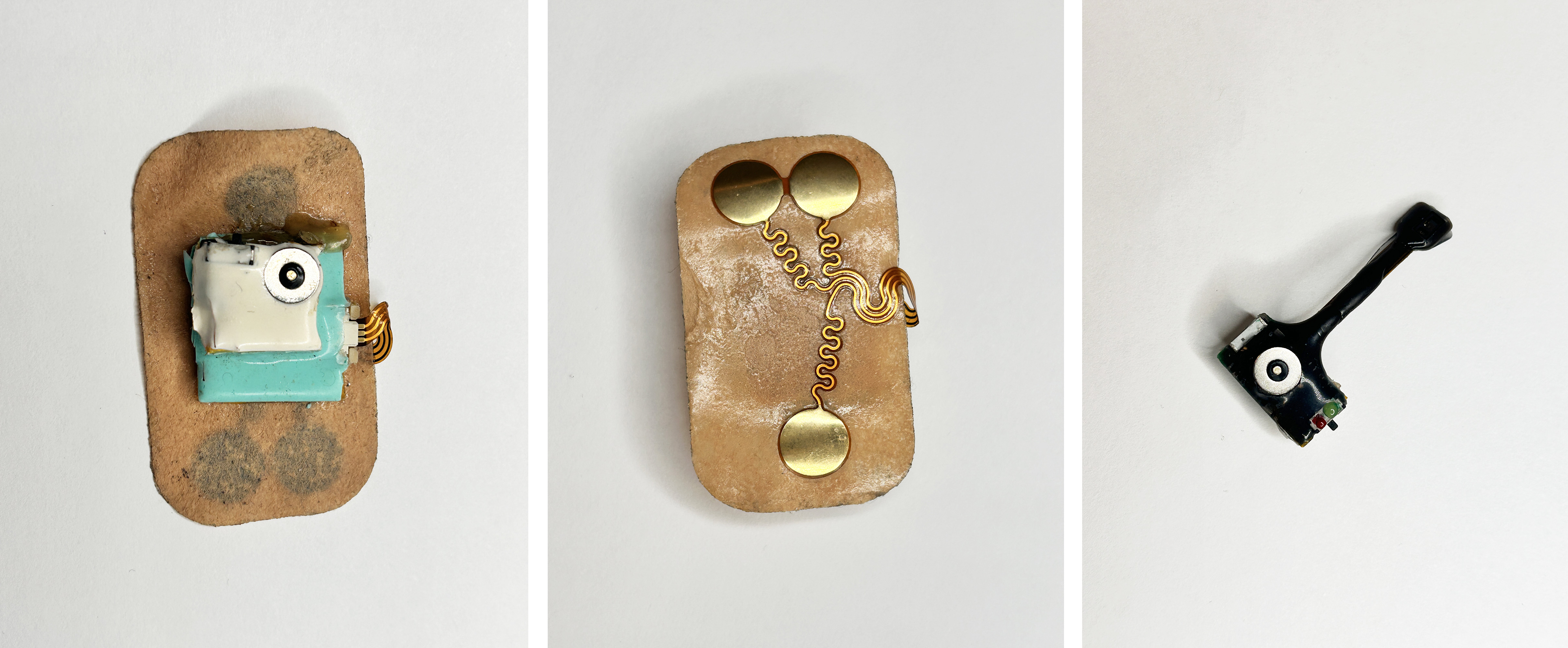

Wearable chest-mounted patch and forehead-mounted pulse oximeter shown on a mannequin baby for illustration

Soft, wearable system offers continuous wireless monitoring of newborns’ health.

A new, soft, all-in-one, wearable system has been designed for continuous wireless monitoring of neonatal health in low-resource settings. Developed by Georgia Tech researchers using advanced packaging technologies, the system features a chest-mounted patch and a forehead-mounted pulse oximeter that transmits real-time data to a smartphone app.

The wearable device measures and records important clinical parameters such as heart rate, respiration rate, temperature, electrocardiograms, and blood oxygen saturation. Speedy detection of abnormal readings in resource-challenged neonatal units could significantly reduce newborn mortality rates.

The device’s pilot study was conducted at Tikur Anbessa Specialized Hospital (TASH) in Addis Ababa, in collaboration with Abebaw Fekadu, Ph.D., from the Centre for Innovative Drug Development and Therapeutic Trials for Africa (CDT Africa Inc.), and neonatologist Asrat Demtse, M.D., from the TASH department of pediatrics. The study demonstrated a significant improvement over current vital sign monitoring and recording methods by providing continuous oversight using less medical equipment while also reducing handwritten paper tracking. Vital signs are the core measurements that indicate the status of the body's life-sustaining functions. Pairing the wearable system with a smartphone app automated the monitoring process and delivered a superior level of neonatal care compared to the current processes at Ethiopia’s best hospital.

Medical staff and parents also observed a reduced need to wake their babies when using the wearable monitoring system. In addition, after participating in the study, 84% of Ethiopian parents said they would use the device at home.

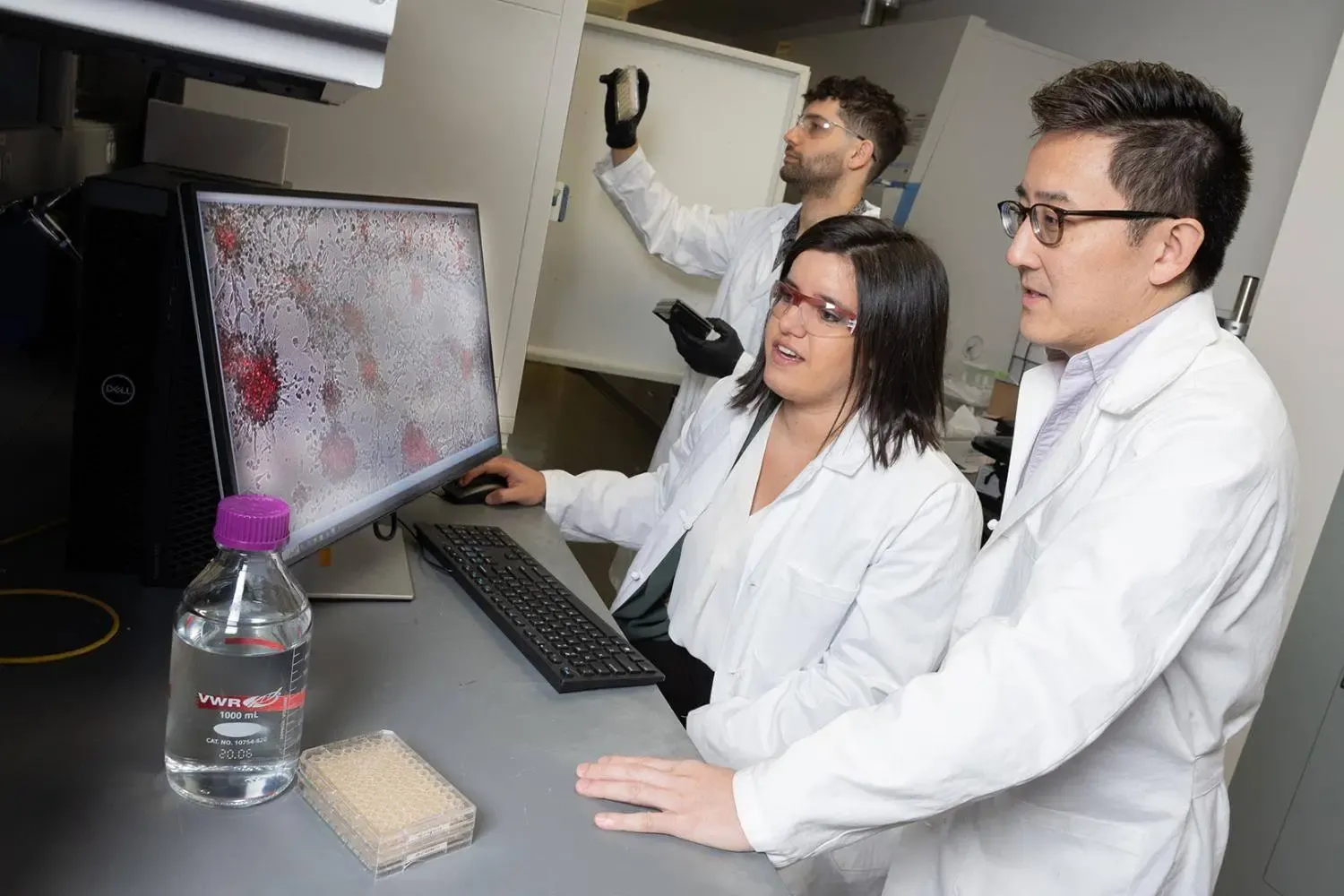

“Professor Hong Yeo and I connected immediately after he gave a brief research talk about a new, wearable cardiac monitor for children,” said Rudy Gleason. “I asked him if we could co-develop a wearable device for newborn babies in Ethiopia that measured not one, but a variety of vital signs. We both thought it was a great idea.”

Yeo and Gleason are faculty members in the George W. Woodruff School of Mechanical Engineering and the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech. Both are also affiliated with Georgia Tech’s Institute for People and Technology, which seeks to improve global health.

In 2009, Gleason and his wife were in the process of adopting a baby from Ethiopia named Kennedy. Before they could bring her home, however, she died — the result, Gleason said, of a seemingly preventable combination of malnutrition and diarrhea.

“This loss redirected my academic teaching, research, and service activities at Georgia Tech,” said Gleason. “Since then, I’ve spent most of my career focused on developing resource-appropriate biomedical devices to reduce maternal and child mortality.”

“When we started this latest study, Ethiopian parents were reluctant to participate. But once we recruited a few mothers in the neonatal intensive care unit (NICU), everyone in the NICU community wanted their child to participate in our wearable health monitoring system.”

According to Yeo, “We designed the wearable patch as a safe, clinical-grade solution with minimal skin irritation. Its key design advantage lies in the use of nanomembranes, which allows the device to be soft and highly conformal to the baby's skin. Wearing the device helps to ensure critical events are not missed since the built-in automation acts as a force multiplier, freeing clinical staff to focus more on complex decision-making rather than manual data acquisition.”

“Rudy has a deep love for the people of Ethiopia. I feel fortunate to have met him as we embark on this project aimed at helping sick babies in the country. Without his support, I could not envision bringing this technology to Ethiopia,” said Yeo.

During the past decade, child mortality rates have decreased in Ethiopia, but newborn deaths have remained mostly unchanged. Both Yeo and Gleason feel their new wearable neonatal device could significantly lower mortality rates for newborns in Ethiopia as they advance this research.

Citation: Zhou, L., Joseph, M., Lee, Y.J. et al. Soft, all-in-one, nanomembrane wearable system for advancing neonatal health monitoring in Ethiopia. npj Digit. Med. 8, 575 (2025).

DOI: https://doi.org/10.1038/s41746-025-01974-8

Funding: Gates Foundation (INV-006189) and the National Institutes of Health (R01HD100635). This work was also supported by the Imlay Foundation—Innovation Fund.

Wearable chest-mounted patch and forehead-mounted pulse oximeter shown close-up

Professor Rudy Gleason with baby and parents at a hospital in Ethiopia

Professors Hong Yeo and Rudy Gleason

Walter Rich

Research Communications

Artist-in-Residence Program Bridges Art and Technology Through Immersive Performance

Dec 05, 2025 — Atlanta, Ga.

Corian Ellisor performs at the Goat Farm Arts Center, November 23.

Georgia Tech’s Institute for People and Technology (IPaT) artist-in-residence program recently concluded a new collaboration with Corian Ellisor, a distinguished educator and performer in concert dance and theater. The residency explored the intersection of art and technology, resulting in an innovative, multi-layered experience that invited audiences to engage with themes of joy, peace, and community.

The project began when Clint Zeagler, principal research scientist and IPaT’s director of strategic partnerships, invited Ellisor to “think big” and imagine how technology could amplify his artistic vision. “This was definitely a moment for me to step out of my comfort zone and to think on a bigger scale,” said Ellisor. “Coming from a poor artist background, we’re always just struggling to make anything. This was an opportunity to dream.”

“Artist residencies within Georgia Tech’s research centers and interdisciplinary research institutes help to drive innovation in our research enterprise, to discover new applications of our research within the arts, to build strong connections with community partners, and — most important of all — to create impactful new works of art,” said Jason Freeman, associate vice provost for the arts at Georgia Tech. “IPaT has long been at the forefront of GT’s initiatives to collaborate with Atlanta-area artists. I am thrilled to see the success of this latest collaboration between Clint Zeagler and Corian Ellisor.”

Ellisor, an Atlanta-based performance artist with a focus on dance theater, was selected as IPaT’s 2025 artist-in-residence. Ellisor has worked with arts communities locally and internationally, including in Georgia, Texas, Florida, Massachusetts, Washington DC, New York, Guatemala, Sweden, the Netherlands, Germany, and the United Kingdom. He was awarded the choreography award at the University of Houston, the Walthall Fellowship through WonderRoot, “Top 20 people to watch in 2013” by Atlanta’s Creative Loafing, an Atlanta Beltline Grant in 2014, an artist in residency award with the Lucky Penny in 2015, and the Best Choreography Award at the Houston Fringe Festival in 2019.

World Building Meets Performance Art

Ellisor’s concept centered on world building, a technique often used in gaming but adapted here for live performance. The goal was to create an immersive environment where audiences could interact and react, while maintaining an uplifting aesthetic. “I wanted something that leaves the audience feeling good—something hopeful,” Ellisor explained. To develop the project, Ellisor and Zeagler hosted workshops with Georgia Tech students and community members, encouraging free-form creation and dialogue around the question: How do people find joy and peace in a chaotic world? Three teams of Georgia Tech undergraduate students were assigned to collaborate with Ellisor and make an avatar of him. The first team was assigned to reproduce Ellisor’s voice. The second team was assigned to generate a visual likeness of Ellisor. The third team worked on the outside aesthetics of a story booth.

The Story Booth: Technology Meets Emotion

A highlight of the residency was the Story Booth, a tech-enabled installation designed to collect personal narratives about joy and solace. Outfitted with full-body scans and voice capture, the booth featured a digital representation of Ellisor and used sentiment analysis to translate stories into color projections. “If someone shared something happy, the booth glowed orange; if it was sentimental, it turned blue,” Ellisor noted. These dynamic visuals illuminated both the booth and its surroundings, creating a striking display of emotion through light.
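The story-to-color idea can be illustrated with a minimal sketch. The article does not describe the booth's actual sentiment model, so the keyword lists, the `classify_story` and `booth_color` names, the RGB values, and the neutral fallback below are all hypothetical stand-ins; only the happy-to-orange and sentimental-to-blue pairing comes from Ellisor's description.

```python
# Hypothetical sketch: a toy keyword classifier stands in for the booth's
# real sentiment analysis, which the article does not describe.
HAPPY_WORDS = {"happy", "joy", "laugh", "smile", "fun"}
SENTIMENTAL_WORDS = {"remember", "miss", "memory", "grandmother", "childhood"}

COLORS = {
    "happy": (255, 140, 0),        # orange, as described in the article
    "sentimental": (0, 90, 255),   # blue, as described in the article
    "neutral": (255, 255, 255),    # assumed fallback, not from the article
}


def classify_story(text: str) -> str:
    """Label a story by counting distinct keyword matches (toy heuristic)."""
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    happy = len(words & HAPPY_WORDS)
    sentimental = len(words & SENTIMENTAL_WORDS)
    if happy == 0 and sentimental == 0:
        return "neutral"
    return "happy" if happy >= sentimental else "sentimental"


def booth_color(text: str) -> tuple[int, int, int]:
    """Return the RGB color the booth would project for a story."""
    return COLORS[classify_story(text)]
```

In a real installation the classifier would likely be a trained sentiment model, and the returned RGB value would be sent to the lighting or projection system.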

An Hour of Galleries Time

The residency culminated in “An Hour of Galleries Time,” an event combining video installations, interactive storytelling, and live dance performances. Dancers engaged with projected visuals before joining together for a collective performance against a massive, illuminated backdrop—transforming the space into a living canvas of movement and light. The interactive performance was held November 23 at the Goat Farm Arts Center, a visual and performing arts center housed in a 19th-century complex of industrial buildings in west midtown Atlanta.

Reflections on Collaboration

Ellisor described the experience as transformative: “I am very happy to have met this community of technologists that I would have never met because our worlds just do not cross at all. Another enlightening experience was trusting myself and trusting the vision, and then letting other people do what they’re supposed to do. Usually as an artist, we are sort of a solo factory. But having the trust in other people to make your vision happen, and it happening, was a really lovely experience.” He added, “I am very grateful to have gone through this with Georgia Tech. There are some tech folks there that were very happy about the final product, which makes me happy.”

Corian Ellisor and fellow dance artists at the Goat Farm Arts Center event.

Walter Rich

Team Revive & Survive Wins Convergence Innovation Competition in Asia

Dec 05, 2025 — Atlanta, Ga.

Pictured: CIC winning student team Revive & Survive from Waseda University, International Christian University, and Keio University in Japan, along with other participants and organizers of the competition.

Student team Revive & Survive from Waseda University, International Christian University, and Keio University in Japan won the Georgia Tech Institute for People and Technology’s (IPaT) Convergence Innovation Competition (CIC) held in Kuala Lumpur, Malaysia, December 1, 2025. This was the second time the contest was held in Asia—the contest was originally started in 2007 at Georgia Tech.

The winning team members were Taiga Cogger, Ryuichiro Go, Kokoro Cogger, and Taiyo Mitsuoka. The team won $2,000. The team’s faculty sponsor was Kiichiro DeLuca, a faculty member at Waseda University and partner at WERU Investment, a global early-stage venture capital firm based in Tokyo.

As the winner, the Revive & Survive student team is also invited to be part of Georgia Tech’s Create-X startup launch in summer 2026 as well as Georgia Tech’s Demo Day, August 2026, in Atlanta. Some travel support for the Atlanta trip will be provided.

Revive & Survive’s project empowers communities through regional revitalization and disaster preparedness for a more resilient and sustainable future.

CIC is a competition recognizing student innovation and entrepreneurship responding to today’s global challenges and opportunities. Founded in 2007 in Atlanta, Georgia, CIC is organized by IPaT at the Georgia Institute of Technology.

For the 2025-2026 final pitches and award ceremony, the competition landed in Kuala Lumpur, Malaysia. The competition focused on student teams from China, India, Japan, Malaysia, the Philippines, Taiwan, Thailand, and Vietnam. Each year, organizers and participants forge new partnerships and foster more collaborations across the Asian continent. IPaT’s CIC Asia Faculty Fellows help cultivate those team projects, and the students showcase their innovative ideas during the competition.

“The CIC students, the support of the faculty fellows, the final competition presentations, and the invited industry forum combine to create a special and unique event,” said IPaT executive director Michael Best. “All of the student finalist projects represented the very best in people-centered technologies responding to global challenges.”

CIC Asia is distinct in how it brings teams from multiple countries together to interact and network. Most innovation competitions are limited to a single university or country.

The four runner-up finalist teams each received $1,000 in prize money. The CIC Asia runner-up team projects and team members are listed below:

- ChiliCare is an IoT and AI farming app with auto watering, pest detection, microclimate insights, crop plotting, and smart fertilizer guidance. Team Members: Muhammad Haizad bin Murad, Hafiy Azfar bin Mohd Masri, Hazriq Haykal Norrol Farhan, Muhammad Naim bin Mazni. Faculty Fellow: Dr. Masrah Azrifah Azmi Murad. Mentor: Dr. Azrina binti Kamaruddin. University: Universiti Putra Malaysia.

- PlaySpot makes booking sports facilities in the Philippines simple and accessible for everyone. Team Members: Louie Gee G. Cabagay, Alwin Matthew T. Chiong, Daniel Justine R. Jadman, Raphael Luis T. Malolos. Faculty Fellow: Mr. Paulo Luis T. Lozano. University: De La Salle University (The Philippines).

- CityFix is a mobile and web platform enabling citizens to quickly report and track municipal issues with GPS, photos, and real-time updates. Team Member: Ng Jia Hong. Faculty Fellow: Ms. Putri Syaidatul Akma Binti Mohd Azmi. University: Multimedia University (Malaysia).

- Flow Vending Machine proposes installing vending machines that dispense biodegradable pads around campus toilets to give women easy access to sanitary pads. Team Members: Ava Jeslina binti Mohd Jamil, Abigail Siew Kar Yan, Ashley Shakyna, Geneve Tsen Fan Qin. Faculty Fellow: Ms. Putri Syaidatul Akma, J.D. Mentor: Ms. Raja Razana Bt Raja Razali. University: Multimedia University (Malaysia).

Future Tech Forum

The CIC event took place alongside the Future Tech Forum, which was also organized by IPaT. The forum focused on innovations, opportunities, and advancements associated with human-centered AI, sustainable data centers, and digital trust and security. Expert panels and speakers from across Asia and Georgia Tech discussed the state of the art in a rapidly changing world, with particular attention to what it means for Asian nations. The event was invitation only and limited to 150 attendees, including established leaders and emerging innovators.

Participating technology speakers and panelists included:

- Honorable YB Tuan Gobind Singh Deo, Minister, Ministry of Digital, Malaysia

- Chee Mun Foong, CEO, YTL AI Labs; and CPO, Ryt Bank

- Chen Change Loy, President's Chair Professor, CCDS, NTU; Director, MMLab@NTU; and Co-Associate Director, S-Lab

- John Lim Ji Xiong, Chief Digital Officer, GAMUDA

- Henry Yang, CMO, Manus

- Ding Wang, Senior Researcher, Responsible AI, Google Research

- Benjamin Croc, CEO, BrioHR

- Tzu Kit Chan, Artificial General Intelligence (AGI), Risks and Safety Advisor of Top Universities in the USA, Singapore, Canada, and France

- Hari Krishnan, Co-founder and CEO of Genie Health

- Benoit Dubeau, Energy Strategy Manager, APAC, Amazon Web Services (AWS)

- Cindy Lin, Professor, School of Interactive Computing, Georgia Tech

- Ko Chuan Zhen, Group CEO & Co-Founder, Plus Xnergy, and Executive Director, BM Greentech

- Zachary Loh, Market Development Manager, Hydroleap

- Nge Foong Kheng, Engineering Manager, APAC, Global Switch

- Verghese Jacob, SVP Technology, DayOne

A photo album of the CIC and Future Tech Forum events can be viewed here.

From top left, clockwise: Teams ChiliCare, PlaySpot, CityFix, and Flow Vending Machine.

Walter Rich

Connecting Communities: Georgia Tech’s Community-Engaged Research Council Drives Engagement and Impact

Dec 04, 2025 —

Grant readiness training participants and facilitators, pictured at the West Atlanta Watershed Alliance's Outdoor Activity Center. Photo includes: Kristin Janacek (BBISS), Thomas Fuentes (Cascade Springs Nature Preserve), Awaz Jabari (Refugee Women's Network), Anurupa Roy (Center for Sustainable Communities), Freddie Stevens III (Re'Gen Community Advisory), Chuck Barlow Sr. (Henderson School Alumni Association and Trust), Katie Kissel (Unearthing Farm and Market), Anna Tinoco Santiago (SCoRE), Tia Davis (ArtsXChange), Cassandra Knight (Henderson School Alumni Association and Trust), Desiree Jones (Georgia Advancing Communities Together), Alexandra Rodriguez Dalmau (SCoRE), Pabitra Poudyel (Refugee Women's Network), Katie O'Connell (Georgia Tech School of City and Regional Planning), Ruthie Yow (SCoRE), and Meena Khodayar (Refugee Women's Network)

Georgia Tech’s research enterprise is expanding its reach beyond campus walls, thanks to the work of the Community-Engaged Research (CER) Council. Formed in 2024, the council focuses on making collaborations between Georgia Tech and community partners easier, more strategic, and more impactful.

“At Georgia Tech, there’s incredible expertise in community engagement,” said Ruthie Yow, SCoRE’s associate director, who facilitates the council. “But until now, there was no centralized way to connect those efforts. The council fills that gap.”

Five Pillars for Impact

The council’s strategy centers on five pillars: Coordination, Partners, Faculty Training and Recognition, Communication, and Resource Development. These priorities emerged from a strategic planning process involving seven interdisciplinary research institutes (IRIs) and centers, including Brook Byers Institute for Sustainable Systems (BBISS), Institute for People and Technology (IPaT), Strategic Energy Institute (SEI), Renewable Bioproducts Institute (RBI), the Enterprise Innovation Institute (EI²), Partnership for Inclusive Innovation (PIN) and SCoRE.

New Tool: Community Connect Website

Council members are developing new tools to support these priorities, including the brand-new Community Connect website, led by Nicole Kennard, assistant director for Community-Engaged Research in BBISS. The platform connects faculty and community partners by allowing them to create profiles, post engagement opportunities, and view an interactive map of partnerships.

“When I started this role, faculty told me they wanted to know who Georgia Tech was already working with and how to find new partners,” Kennard said. “They didn’t want to duplicate efforts or cold-call potential partners. This website addresses this challenge by showing existing connections and helping track engagement.”

The site will also serve as a data repository to measure the impact of partnerships. “Having this data will help us advocate for infrastructure and support for community-engaged research,” Kennard added.

BBISS, IPaT, and more than 70 people from five of the Institute’s colleges and 18 units across GT supported the development of this new interactive site. The site is up and running while the team makes minor adjustments before a full launch in Spring 2026. Make a profile and share any website feedback with Nicole Kennard.

Building Capacity: Grant Readiness Training

In September, the council sponsored a grant readiness training for 18 community-based organizations. Led by SCoRE, the two-day workshop at the Outdoor Activity Center in West Atlanta covered proposal basics, budgeting, logic models, and outcome measurement. The training helped these partners build the foundational systems, content, and strategies needed for effective grant seeking. Rather than focusing solely on writing techniques, the intensive workshop emphasized organizational readiness, equipping participants with materials such as boilerplate content, budget templates, outcome measurement frameworks, and funder research strategies. Tailored for organizations with limited staff who juggle multiple roles, the training provided practical, immediately applicable tools that support a proactive, long-term approach to securing grant funding. Read more about the training here.

Collaboration in Action: Clarkston Project

Through the leadership of council members Leigh Hopkins and Candice McKie, the council is launching a collaboration with the Center for Economic Development Research (CEDR) to support strategic visioning for the City of Clarkston after funding cuts threatened its planning process. Clarkston, Georgia, one of the most culturally diverse cities in the country, is moving into the second phase of its collaboration with CEDR. Together, the two groups are continuing to work on place-making, community-wide events, and creative incentives to attract and retain new businesses.

“It was a great example of pooling resources to lift up community vision and meet a community need,” Yow said.

Networking for Impact

On December 10, the council will host a networking event for faculty and staff engaged in CER. The goal is to share successes, attract new collaborators, and identify projects for 2026.

Join us at 2 p.m. in the Student Success Center, President’s Suite B, for light refreshments.

Engagement Across IRIs

Georgia Tech’s interdisciplinary research institutes are already leading impactful projects: IPaT’s CEAR Hub supports climate and cultural resilience in Georgia’s barrier islands; BBISS works on conservation and cultural sustainability with tribal Ojibwe partners; SEI’s Energy Faculty Fellows Program builds research networks with minority-serving institutions; RBI’s ReWood initiative advances renewable forest biotechnology for a climate-smart economy.

Faculty interested in learning more about CER can start by connecting with the council members. “We want to make it easy for researchers and communities to create mutually beneficial partnerships,” Yow said. “Reach out, share your work, and join us in building Georgia Tech’s impact.”

Council members include Terri Sapp (RBI), Clint Zeagler (IPaT), Nicole Kennard (BBISS), Leigh Hopkins and Candice McKie (CEDR), Yang You (SEI), Katie O'Connor (PIN), Ruthie Yow (SCoRE), and Rose Santa Gonzalez (Institute for Robotics & Intelligent Machines).

Jennifer Martin, Assistant Director of Research Communications Services

Real-World Helper Exoskeletons Just Got Closer to Reality

Nov 19, 2025 —

Researchers Matthew Gombolay, left, and Aaron Young used the lower-limb exoskeleton demonstrated in the background to test their new approach to creating exoskeleton controllers. They use huge amounts of existing data on how people move to create functional controllers able to provide meaningful assistance. And unlike earlier controllers, they do not require hours and hours of additional training and data collection with each specific exoskeleton device.

To make useful wearable robotic devices that can help stroke patients or people with amputated limbs, the computer brains driving the systems must be trained. That takes time and money — lots of time and money. And researchers need specially equipped labs to collect mountains of human data for training.

Even when engineers have a working device and brain, called a controller, changes and improvements to the exoskeleton system typically mean data collection and training start all over again. The process is expensive and makes bringing fully functional exoskeletons or robotic limbs into the real world largely impractical.

Not anymore, thanks to Georgia Tech engineers and computer scientists.

They’ve created an artificial intelligence tool that can turn huge amounts of existing data on how people move into functional exoskeleton controllers. No data collection, retraining, or hours upon hours of additional lab time required for each specific device.

Their approach has produced an exoskeleton brain that offers meaningful assistance across a huge range of hip and knee movements and works as well as the best controllers currently available. Their work was published Nov. 19 in Science Robotics.

Joshua Stewart

College of Engineering

The Nexus of Economic Security and Technology

Why Georgia Matters for South Korea: Georgia is home to some of the largest Korean investments in the United States, including Hyundai Motor Group’s $7.6 billion Metaplant–the biggest economic development project in state history–and significant supply-chain growth across the state.

Georgia Tech Ranked No. 7 Globally in Interdisciplinary Science Rankings

Nov 20, 2025 —

Georgia Institute of Technology has been ranked 7th in the world in the 2026 Times Higher Education Interdisciplinary Science Rankings, in association with Schmidt Science Fellows. This designation underscores Georgia Tech’s leadership in research that solves global challenges.

“Interdisciplinary research is at the heart of Georgia Tech’s mission,” said Tim Lieuwen, executive vice president for Research. “Our faculty, students, and research teams work across disciplines to create transformative solutions in areas such as healthcare, energy, advanced manufacturing, and artificial intelligence. This ranking reflects the strength of our collaborative culture and the impact of our research on society.”

As a top R1 research university, Georgia Tech is shaping the future of basic and applied research by pursuing inventive solutions to the world’s most pressing problems. Whether discovering cancer treatments or developing new methods to power our communities, work at the Institute focuses on improving the human condition.

Teams from all seven Georgia Tech colleges, 11 interdisciplinary research institutes, the Georgia Tech Research Institute, Enterprise Innovation Institute, and hundreds of research labs and centers work together to transform ideas into real results.

Contact: Angela Ayers

Professor Earns Test-of-Time Award at AI and Computer Gaming Conference

Nov 12, 2025 —

One of the top conferences for AI and computer games is recognizing a School of Interactive Computing professor with its first-ever test-of-time award.

At its event this week in Alberta, Canada, the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE) is honoring Professor Mark Riedl. The award also honors University of Utah Professor and Division of Games Chair Michael Young, Riedl’s Ph.D. advisor.

Riedl studied under Young at North Carolina State University.

Their 2005 paper, From Linear Story Generation to Branching Story Graphs, highlighted the challenges of using AI to create interactive gaming narratives in which user actions influence the story’s progression.

In 2005, computer game systems that supported linear, non-branching games were widely used. Riedl introduced an innovative mathematical formula for interactive stories ranging from choose-your-own-adventure novels to modern computer games.

“We didn’t use the term ‘generative AI’ back then, but I was working on AI for the generation of creative artifacts,” Riedl said. “This was before we had practical deep learning or large language models.

“One of the reasons this paper is still relevant 20 years later is that it didn’t just present a technology, it attempted to provide a framework for solving a grand challenge in AI.”

That challenge is still ongoing, Riedl said. Game designers continue to struggle with balancing story coherence against the amount of narrative control afforded to users.

“When users exercise a high degree of control within the environment, it is likely that their actions will change the state of the world in ways that may interfere with the causal dependencies between actions as intended within a storyline,” Riedl and Young wrote in the paper.

“Narrative mediation makes linear narratives interactive. The question is: Is the expressive power of narrative mediation at least as powerful as the story graph representation?”
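As a rough illustration of the story graph idea mentioned in the quote, the sketch below models a tiny branching story as a dictionary in which each scene maps user choices to the next scene. This is a toy example, not the representation or algorithm from Riedl and Young's paper; the scene names, the `play` and `linear_stories` helpers, and the assumption that the graph has no cycles are all hypothetical.

```python
# Illustrative only: a toy branching story graph, not the paper's formalism.
# Each scene maps a user choice to the next scene; empty dicts are endings.
STORY_GRAPH = {
    "start": {"open door": "hallway", "stay put": "ending_wait"},
    "hallway": {"go left": "ending_garden", "go right": "ending_cellar"},
    "ending_wait": {},
    "ending_garden": {},
    "ending_cellar": {},
}


def play(graph, start, choices):
    """Follow a sequence of user choices and return the scenes visited."""
    node = start
    visited = [node]
    for choice in choices:
        node = graph[node][choice]  # KeyError if the choice is not offered
        visited.append(node)
    return visited


def linear_stories(graph, node):
    """Enumerate every linear story from `node` to an ending.

    Assumes the graph is acyclic; a cycle would recurse forever.
    """
    if not graph[node]:
        return [[node]]
    stories = []
    for next_node in graph[node].values():
        for tail in linear_stories(graph, next_node):
            stories.append([node] + tail)
    return stories
```

Calling `linear_stories(STORY_GRAPH, "start")` lists the three distinct linear stories this small graph contains, which hints at why authoring coherent branches by hand becomes costly as user control grows.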

AIIDE is being held this week at the University of Alberta in Edmonton, Alberta. Riedl will receive the award on Wednesday.

IPaT Research Lightning Talks

Speakers:

Aaron Gabryluk, Research Scientist, IPaT

Brian Jones, Principal Research Engineer, IPaT

Richard Starr, Director of Research Operations and Research Scientist, IPaT

Scott Gilliland, Senior Research Scientist, IPaT